By default, the GenAI Node is disabled. To enable the node, see Dynamic Conversations Features.

Add GenAI Node to a Dialog Task

Steps to add the GenAI Node to a Dialog Task:

- Go to Build > Conversational Skills > Dialog Tasks and select the task that you are working with.

- You can add the GenAI Node just like any other node. You can find it in the main list of nodes.

Configure GenAI Node

Component Properties

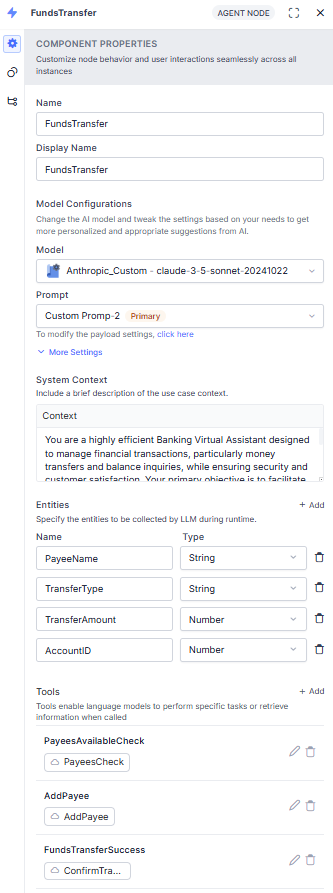

The component properties empower you to configure the following settings. The changes made within this section affect this node across all instances and dialog tasks.

General Settings

General Settings let you provide a Name and Display Name for the node. The node name cannot contain spaces.

Advance Settings

Adjusting the settings allows you to fine-tune the model’s behavior to meet your needs. The default settings work fine for most cases. You can tweak the settings and find the right balance for your use case. A few settings are common in the features, and a few are feature-specific:

- Model: The selected model for which the settings are displayed.

- Prompt/Instructions or Context: Add feature/use case-specific instructions or context to guide the model.

- Conversation History Length: This setting allows you to specify the number of recent messages sent to the LLM as context. These messages include both user messages and virtual assistant (VA) messages. The default value is 10. This conversation history can be seen from the debug logs.

Note: Applicable only if you are using a custom prompt.

- Temperature: This setting controls the randomness of the model’s output. A higher temperature, like 0.8 or above, can result in unexpected, creative, and less relevant responses. On the other hand, a lower temperature, like 0.5 or below, makes the output more focused and relevant.

- Max Tokens: It indicates the total number of tokens used in the API call to the model. It affects the cost and the time taken to receive a response. A token can be as short as one character or as long as one word, depending on the text.

- Fallback Behavior: Defines what the system does when the LLM call fails or the Guardrails are violated. You can select one of the following fallback behaviors:

- Trigger the Task Execution Failure Event

- Skip the current node and jump to a particular node. The system skips the node and transitions to the node the user selects. By default, ‘End of Dialog’ is selected.
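As an illustration of the Conversation History Length setting, the following minimal sketch keeps only the most recent messages before they would be sent as context. The helper name `selectRecentMessages` and the message shape are assumptions made for this example; the Platform applies the setting internally.

```javascript
// Hypothetical helper: keep only the most recent `historyLength` messages.
// The default of 10 mirrors the default value stated above.
function selectRecentMessages(messages, historyLength = 10) {
  if (!Number.isInteger(historyLength) || historyLength < 0) {
    throw new RangeError("historyLength must be a non-negative integer");
  }
  // slice(-0) would return the whole array, so handle 0 explicitly.
  return historyLength === 0 ? [] : messages.slice(-historyLength);
}

// Twelve alternating user/VA messages; only the last ten are kept.
const history = Array.from({ length: 12 }, (_, i) => ({
  role: i % 2 === 0 ? "user" : "assistant",
  content: `message ${i + 1}`
}));

const recent = selectRecentMessages(history);
console.log(recent.length);     // 10
console.log(recent[0].content); // "message 3"
```

A larger window gives the model more context but also increases the number of tokens sent with each call.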

Dialog Details

Under Dialog Details, configure the following:

Pre-Processor Script

This property helps execute a script as the first step when the GenAI Node is reached. Use the script to manipulate data and incorporate it into rules or exit scenarios as required. The Pre-processor Script has the same properties as the Script Node. Learn more.

To define a pre-processor script, click Define Script, add the script you want to execute, and click Save.
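A pre-processor script might look like the sketch below. It assumes the Platform injects a `context` object at runtime (the sample prompt later on this page writes to `context.payloadFields`); the `userProfile`, `knownPassengerName`, and `maxTicketsPerBooking` keys are hypothetical names used only for illustration.

```javascript
// Local stand-in for the platform-provided `context` object so the sketch
// can run outside the Platform. All keys below are hypothetical.
const context = { userProfile: { firstName: "Asha", lastName: "Rao" } };

// --- Script body: what you would paste into Define Script ---
const profile = context.userProfile || {};

// Prepare a value that rules or exit scenarios can refer to later.
context.knownPassengerName = [profile.firstName, profile.lastName]
  .filter(Boolean)
  .join(" ");

// Store a limit that an exit scenario could check against.
context.maxTicketsPerBooking = 5;

console.log(context.knownPassengerName); // "Asha Rao"
```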

System Context

Add a brief description of the use case context to guide the model.

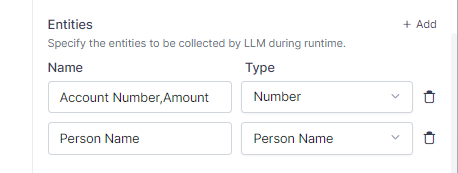

Entities

Specify the entities to be collected by LLM during runtime. In the Entities section, click + Add, enter an Entity Name, and select the Entity Type from the drop-down list.

Most entity types are supported. Here are the exceptions: custom, composite, list of items (enumerated and lookup), and attachment. See Entity Types for more information.

Tools

Tools allow the GenAI Node to interact with external services, fetching or posting data as needed. When called, they let language models perform tasks or obtain information by executing actions linked to Script and Service nodes. Users can add a maximum of 5 tools to each node.

Note: The GenAI Node supports tool-calling with custom JavaScript prompts in non-streaming mode.

Click + Add to open the New Tool creation window.

Define the following details for tool configuration:

- Name: Add a meaningful name that helps the language model identify the tool to call during the conversation.

- Description: Provide a detailed explanation of what the tool does to help the language model understand when to call it.

- Parameters: Specify the inputs the tool needs to collect from the user. Define up to 10 parameters for each tool and mark them as mandatory or optional.

- Name: Enter the parameter name.

- Description: Enter an appropriate description of the parameter.

- Type: Select the parameter type (String, Boolean, or Integer).

- Actions: These are the nodes that the XO Platform executes when the language model requests a tool call with the required parameters. Users can add up to 5 actions for each tool. These actions are chained and executed sequentially, where the output of one action becomes the input for the next.

- Node Type: Select the node type (Service Node or Script Node) from the dropdown.

- Node Name: Select a new or existing node from the dropdown.

- Response Path: Add the final output of the action nodes as a Response Path so that the Platform knows where to find the actual response in the payload. Choose the specific key or path that defines the output.

- Choose transition: Define the behavior after tool execution:

- Default: Send the response back to the LLM. It is mandatory to have a Response Path in this case.

- Exit Node: Follow the transitions defined for the GenAI Node.

- Jump to a Node: You can jump to any node defined in the same dialog flow.
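The chaining of actions and the role of the Response Path can be sketched as follows. This is a simplified, synchronous illustration: `runToolActions`, `resolvePath`, and the two sample actions are hypothetical stand-ins for Script and Service nodes, and the dot-separated path syntax is an assumption rather than the Platform's documented format.

```javascript
// Resolve a dot-separated path such as "data.delivery_date" inside an object.
function resolvePath(payload, path) {
  return path.split(".").reduce(
    (value, key) => (value == null ? undefined : value[key]),
    payload
  );
}

// Run actions in order; each action receives the previous action's output.
// The Response Path then selects the value returned to the language model.
function runToolActions(actions, toolArgs, responsePath) {
  const finalOutput = actions.reduce((input, action) => action(input), toolArgs);
  return resolvePath(finalOutput, responsePath);
}

// Two hypothetical actions standing in for a Script Node and a Service Node.
const normalizeOrderId = (args) => ({ orderId: String(args.order_id).trim() });
const lookupDelivery = (input) => ({
  data: { order_id: input.orderId, delivery_date: "2024-11-20" }
});

const result = runToolActions(
  [normalizeOrderId, lookupDelivery],
  { order_id: " 123 " },
  "data.delivery_date"
);
console.log(result); // "2024-11-20"
```

With the Default transition, a value like `result` is what would be sent back to the LLM, which is why a Response Path is mandatory in that case.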

Jump to a Node Transition

The Jump-to-Node transition option enables the creation of sophisticated dialog workflows. It allows for dynamic branching based on tool execution results, significantly streamlining the design of complex conversation flows.

Key Updates:

- Added “Jump-to-Node” transition option for tools within the GenAI Node.

- Enables seamless navigation to specified target nodes following tool execution.

- Maintains complete session-level conversation history across all transitions.

- Supports transitions to both orphan nodes and sub-dialogs.

- Ensures full backward compatibility with existing tool configurations.

Rules

Add the business rules that the collected entities should respect. In the rules section, click + Add, then enter a short and to-the-point sentence, such as:

- The airport name should include the IATA Airport Code;

- The passenger’s name should include the last name.

There is a 250-character limit to the Rules field, and you can add a maximum of 5 rules.

Exit Scenarios

Specify the scenarios that should terminate entity collection and return to the dialog task. This means the node ends interaction with the generative AI model and returns to the dialog flow within the XO Platform.

Click Add Scenario, then enter short, clear, and to-the-point phrases that specifically tell the generative AI model when to exit and return to the dialog flow. For example, Exit when the user wants to book more than 5 tickets in a single booking and return "max limit reached".

There is a 250-character limit to the Scenarios field, and you can add a maximum of 5 scenarios.
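The limits on rules and exit scenarios (at most 5 entries, each up to 250 characters) can be checked before you paste entries into the node. The sketch below is a hypothetical helper for drafting entries offline; the Platform enforces these limits in the UI.

```javascript
const MAX_ENTRIES = 5;   // maximum number of rules or scenarios per node
const MAX_CHARS = 250;   // character limit per rule or scenario

// Return a list of human-readable problems; an empty list means all entries fit.
function validateEntries(entries) {
  const errors = [];
  if (entries.length > MAX_ENTRIES) {
    errors.push(`Too many entries: ${entries.length} (maximum ${MAX_ENTRIES})`);
  }
  entries.forEach((text, index) => {
    if (text.length > MAX_CHARS) {
      errors.push(`Entry ${index + 1} has ${text.length} characters (maximum ${MAX_CHARS})`);
    }
  });
  return errors;
}

const rules = [
  "The airport name should include the IATA Airport Code",
  "The passenger's name should include the last name"
];
console.log(validateEntries(rules).length); // 0
```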

Post-Processor Script

This property initiates the post-processor script after processing every user input as part of the GenAI Node. Use the script to manipulate the response captured in the context variables just before exiting the GenAI Node for both the success and exit scenarios. The Post-processor Script has the same properties as the Script Node. Learn more.

Important Considerations

If the GenAI Node requires multiple user inputs, the post-processor is executed for every user input received.

To define a post-processor script, click Define Script and add the script you want to execute.
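A post-processor script could, for example, normalize a collected value. In the sketch below, `context` is a local stand-in for the platform-provided object and `collectedEntities` is a hypothetical key; check the debug logs for where your node actually stores its entities.

```javascript
// Local stand-in for the platform-provided `context` object.
// `collectedEntities` is a hypothetical key used only for this example.
const context = {
  collectedEntities: { airport: " jfk ", passengerName: "Asha Rao" }
};

// --- Script body: what you would paste into Define Script ---
const entities = context.collectedEntities || {};

if (typeof entities.airport === "string") {
  // Normalize the airport code: strip spaces and convert to upper case.
  entities.airport = entities.airport.trim().toUpperCase();
}

context.collectedEntities = entities;

console.log(context.collectedEntities.airport); // "JFK"
```

Because the post-processor runs after every user input, it helps to keep the script idempotent: applying the normalization above a second time leaves the value unchanged.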

Instance Properties

Configure the instance-specific fields for this node. These apply only for this instance and will not affect this adaptive dialog node when used in other tasks. You must configure Instance Properties for each task where this node is used.

User Input

Define how user input validation occurs for this node:

- Mandatory: This entity is required and must be provided before proceeding.

- Allowed Retries: Configure the maximum number of times a user is prompted for a valid input. You can choose from 5 to 25 retries in increments of 5. The default value is 10 retries.

- Behavior on Exceeding Retries: Define what happens when the user exceeds the allowed retries. You can choose to either End the Dialog or Transition to a Node – in which case you can select the node to transition to.
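The retry settings above can be pictured with the following sketch. The function `resolveNextStep`, its setting names, and the exact counting rule (re-prompt while fewer prompts than the allowed number have been used) are assumptions made for illustration, not the Platform's implementation.

```javascript
const ALLOWED_RETRY_VALUES = [5, 10, 15, 20, 25]; // 5 to 25 in steps of 5
const DEFAULT_RETRIES = 10;

// Decide what happens after an invalid input, given how many prompts were used.
function resolveNextStep(promptsUsed, settings = {}) {
  const allowedRetries = settings.allowedRetries ?? DEFAULT_RETRIES;
  if (!ALLOWED_RETRY_VALUES.includes(allowedRetries)) {
    throw new RangeError(
      `allowedRetries must be one of ${ALLOWED_RETRY_VALUES.join(", ")}`
    );
  }
  if (promptsUsed < allowedRetries) {
    return { action: "reprompt" };
  }
  // Retries exhausted: either transition to the chosen node or end the dialog.
  return settings.onExceed === "transition"
    ? { action: "transition", targetNode: settings.targetNode }
    : { action: "endDialog" };
}

console.log(resolveNextStep(3).action);  // "reprompt"
console.log(resolveNextStep(10).action); // "endDialog"
console.log(
  resolveNextStep(5, {
    allowedRetries: 5,
    onExceed: "transition",
    targetNode: "AgentTransfer" // hypothetical node name
  }).action
); // "transition"
```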

User Input Correction

Decide whether to use autocorrect to mitigate potential user input errors:

- Autocorrect user input: The input will be autocorrected for spelling and other common errors.

- Do not autocorrect user input: The user input will be used without making any corrections.

Advanced Controls

Configure advanced controls for this node instance as follows:

Interruptions Behavior

Define the interruption handling at this node. You can select from the following options:

- Use the task level ‘Interruptions Behavior’ setting: The VA refers to the Interruptions Behavior settings set at the dialog task level.

- Customize for this node: You can customize the Interruptions Behavior settings by selecting this option and configuring it. You can choose whether to allow interruptions or not, or to allow the end user to select the behavior. You can further customize Hold and Resume behavior. Read the Interruption Handling and Context Switching article for more information.

- Analytics – Containment Type: Select one of the below options to determine how to treat user-abandoned conversations.

- Use task-level default settings: This refers to the Containment Type settings set at the dialog level.

- Customize for this node: You can customize the Containment Type for this node by selecting this option. You can choose either Self Service or Drop-off for user-abandoned conversations.

- Custom Tags

Add Custom Meta Tags to the conversation flow to profile VA-user conversations and derive business-critical insights from usage and execution metrics. You can define tags to be attached to messages, users, and sessions. See Custom Meta Tags for details.

Voice Call Properties

Configure Voice Properties to streamline the user experience on voice channels. You can define prompts, grammar, and other call behavior parameters for the node. This node does not require Initial Prompts, Error Prompts, and grammar configuration.

See Voice Call Properties for more information on setting up this section of the GenAI Node.

Connections Properties

Note: If the node is at the bottom of the sequence, then only the connection property is visible.

Define the transition conditions from this node. These conditions apply only to this instance and will not affect this node’s use in any other dialog. See Adding IF-Else Conditions to Node Connections for a detailed setup guide.

Custom Prompt for GenAI Node

Custom prompts are required to work with the GenAI Node for tool-calling functionality. Platform users can create custom prompts using JavaScript to tailor the AI model’s behavior and generate outputs aligned with their specific use case. By leveraging the Prompts and Requests Library, users can access, modify, and reuse prompts across different GenAI Nodes. The custom prompt processes the prompt and variables to generate a JSON object, which is then sent to the configured language model. Users can preview and validate the generated JSON object to ensure the desired structure is achieved.

GenAI Node with custom prompt supports configuring pre and post-processor scripts at both node and prompt levels. This enables platform users to reuse the same custom prompt across multiple nodes while customizing the processing logic, input variables, and output keys for each specific use case.

When you configure pre and post-processor scripts at both node and prompt levels, the execution order is: Node Pre-processor → Prompt Pre-processor → Prompt → Prompt Post-processor → Node Post-processor.

Note: Node-level pre and post-processor scripts support Bot Functions in addition to content, context, and environment variables.
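The execution order described above can be sketched as a simple pipeline. The stage bodies below are placeholders invented for illustration (including the canned response in the prompt post-processor stage); only the order of the five stages comes from this page.

```javascript
// Each stage receives the shared state and returns an updated copy.
// The stage bodies are placeholders, not platform code.
const stages = [
  ["Node Pre-processor", (s) => ({ ...s, maxTickets: 5 })],
  ["Prompt Pre-processor", (s) => ({ ...s, userInput: s.userInput.trim() })],
  ["Prompt", (s) => ({
    ...s,
    payload: { messages: [{ role: "user", content: s.userInput }] }
  })],
  ["Prompt Post-processor", (s) => ({
    ...s,
    response: { bot: "How many tickets?", conv_status: "ongoing" }
  })],
  ["Node Post-processor", (s) => ({
    ...s,
    done: s.response.conv_status === "ended"
  })]
];

// Run the stages strictly in the listed order and record their names.
function runInOrder(stageList, initialState) {
  const executed = [];
  const finalState = stageList.reduce((state, [name, run]) => {
    executed.push(name);
    return run(state);
  }, initialState);
  return { executed, finalState };
}

const { executed, finalState } = runInOrder(stages, {
  userInput: "  book a flight "
});
console.log(executed.join(" → "));
console.log(finalState.done); // false
```

Keeping use-case-specific logic in the node-level scripts and format-specific logic in the prompt-level scripts is one way to reuse a single prompt across several nodes.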

Let’s review a sample prompt written in JavaScript and follow the step-by-step instructions to create a custom prompt.

Sample JavaScript

let payloadFields = {

model: "claude-3-5-sonnet-20241022",

max_tokens: 8192,

system:`${System_Context}.

${Required_Entities && Required_Entities.length ?

`**Entities Required for the Use Case**: You are instructed to collect the entities from the list: ${Required_Entities}

**Entity Collection Rules**:

- Do not prompt the user if any of the entity data is already captured or available in the context`: ''}

**Instructions To be Followed**:: ${Business_Rules}

**Tone and Language**::

- Maintain a professional, helpful, and polite tone.

- Support multiple languages if applicable to cater to diverse users.

**Output Format**::

- You Should Always STRICTLY respond in a **STRINGIFIED JSON format** to ensure compatibility with downstream systems.

- The response JSON must include the following keys:

- "bot": A string containing either:

- A prompt to collect missing required information

- A final response

- "entities": An array of objects containing collected entities in format:

[

{

"key1": "value1",

"key2": "value2"

}

]

- **conv_status**: String indicating conversation status:

- "ongoing": When conversation requires more information

- "ended": When one of these conditions is met:

- All required entities are collected

- All required functions/tools executed successfully

- Final response provided to user

- when one of the Scenarios Met from ${Exit_Scenarios}.`,

messages: []

};

// Add tool definitions to the payload when Tools_Definition exists and is not empty

if (Tools_Definition && Tools_Definition.length) {

payloadFields.tools = Tools_Definition.map(tool_info => {

return {

name: tool_info.name,

description: tool_info.description,

input_schema: tool_info.parameters

};

});

}

// Map conversation history to context chat history

let contextChatHistory = [];

if (Conversation_History && Conversation_History.length) {

contextChatHistory = Conversation_History.map(function(entry) {

return {

role: entry.role === "tool" ? "user" : entry.role,

content: (typeof entry.content === "string") ? entry.content : entry.content.map(content => {

if (content.type === "tool-call") {

return {

"type": "tool_use",

"id": content.toolCallId,

"name": content.toolName,

"input": content.args

}

}

else {

return {

"type": "tool_result",

"tool_use_id": content.toolCallId,

"content": content.result

}

}

})

};

});

}

// Push context chat history into messages

payloadFields.messages.push(...contextChatHistory);

// Add user input to messages

let lastMessage;

if (contextChatHistory && contextChatHistory.length) {

lastMessage = contextChatHistory[contextChatHistory.length-1];

}

if (!lastMessage || (lastMessage && lastMessage.role !== "tool")) {

payloadFields.messages.push({

role: "user",

content: `${User_Input}`

});

}

// Assign payloadFields to context

context.payloadFields = payloadFields;
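To see what the conversation history mapping in the sample produces, the following standalone sketch applies the same mapping to a small, made-up history. The function name `mapHistoryEntry` and the sample entries are assumptions; the field names (`toolCallId`, `toolName`, `args`, `result`) follow the sample script above.

```javascript
// Same mapping as in the sample prompt, extracted into a function.
function mapHistoryEntry(entry) {
  return {
    // Tool results are sent with the "user" role.
    role: entry.role === "tool" ? "user" : entry.role,
    content:
      typeof entry.content === "string"
        ? entry.content
        : entry.content.map((part) =>
            part.type === "tool-call"
              ? { type: "tool_use", id: part.toolCallId, name: part.toolName, input: part.args }
              : { type: "tool_result", tool_use_id: part.toolCallId, content: part.result }
          )
  };
}

// Made-up history: a user message, a tool call, and the tool's result.
const Conversation_History = [
  { role: "user", content: "Where is order 123?" },
  {
    role: "assistant",
    content: [{ type: "tool-call", toolCallId: "call_1", toolName: "get_delivery_date", args: { order_id: "123" } }]
  },
  {
    role: "tool",
    content: [{ type: "tool-result", toolCallId: "call_1", toolName: "get_delivery_date", result: { delivery_date: "2024-11-20" } }]
  }
];

const messages = Conversation_History.map(mapHistoryEntry);
console.log(messages[1].content[0].type); // "tool_use"
console.log(messages[2].role);            // "user"
console.log(messages[2].content[0].type); // "tool_result"
```

One observation from this sketch: after mapping, no entry keeps the role "tool", so logic that needs to detect a trailing tool result may be easier to base on the original Conversation_History entry than on the mapped one.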

Add Custom Prompt

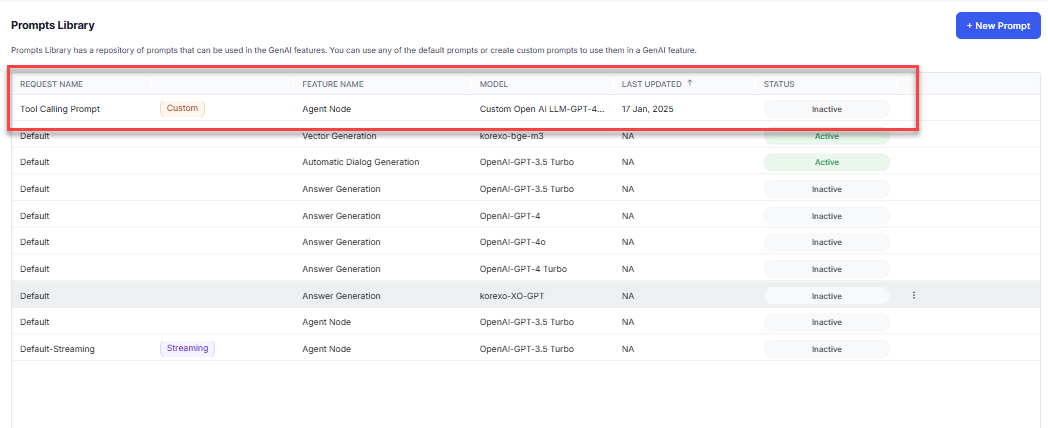

The process involves creating a new prompt in the Prompts Library and writing the JavaScript code to generate the desired JSON object. Users can preview and test the prompt to ensure it generates the expected JSON object. Once the custom prompt is created, users can select it in the GenAI Node configuration to leverage its functionality.

For more information on Custom Prompt, see Prompts and Requests Library.

To add a GenAI Node prompt using JavaScript, follow the steps:

- Go to Build > Natural Language > Generative AI & LLM.

- On the top right corner of the Prompts and Requests Library section, click +Add New.

- Enter the prompt name. In the feature dropdown, select GenAI Node and select the model.

- The Configuration section consists of End-point URLs, Authentication, and Header values required to connect to a large language model. These are auto-populated based on the input provided during model integration and are not editable.

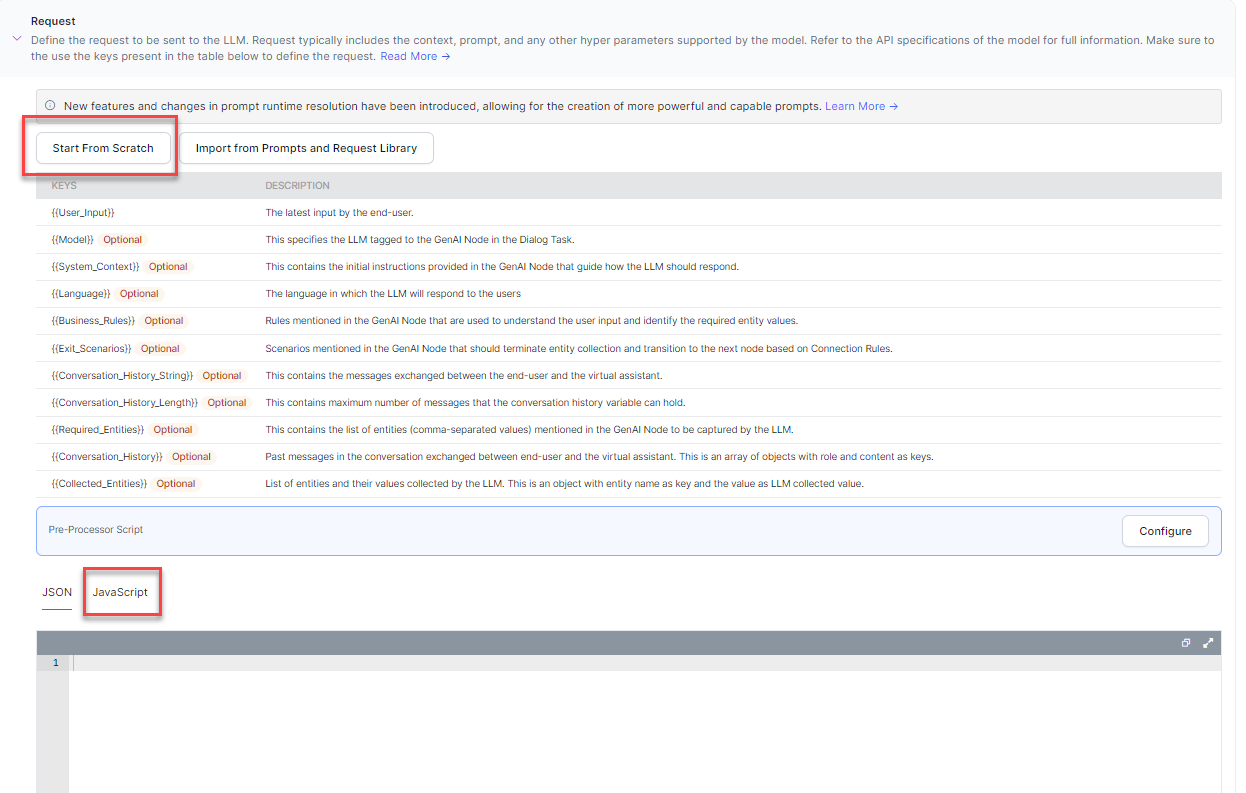

- In the Request section, click Start from Scratch. Learn more.

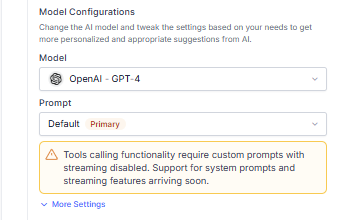

- Ensure the Stream Response is disabled, as the GenAI Node supports tool-calling with custom JavaScript prompts in non-streaming mode.

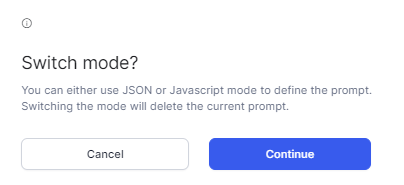

- Click JavaScript. The Switch Mode pop-up is displayed. Click Continue.

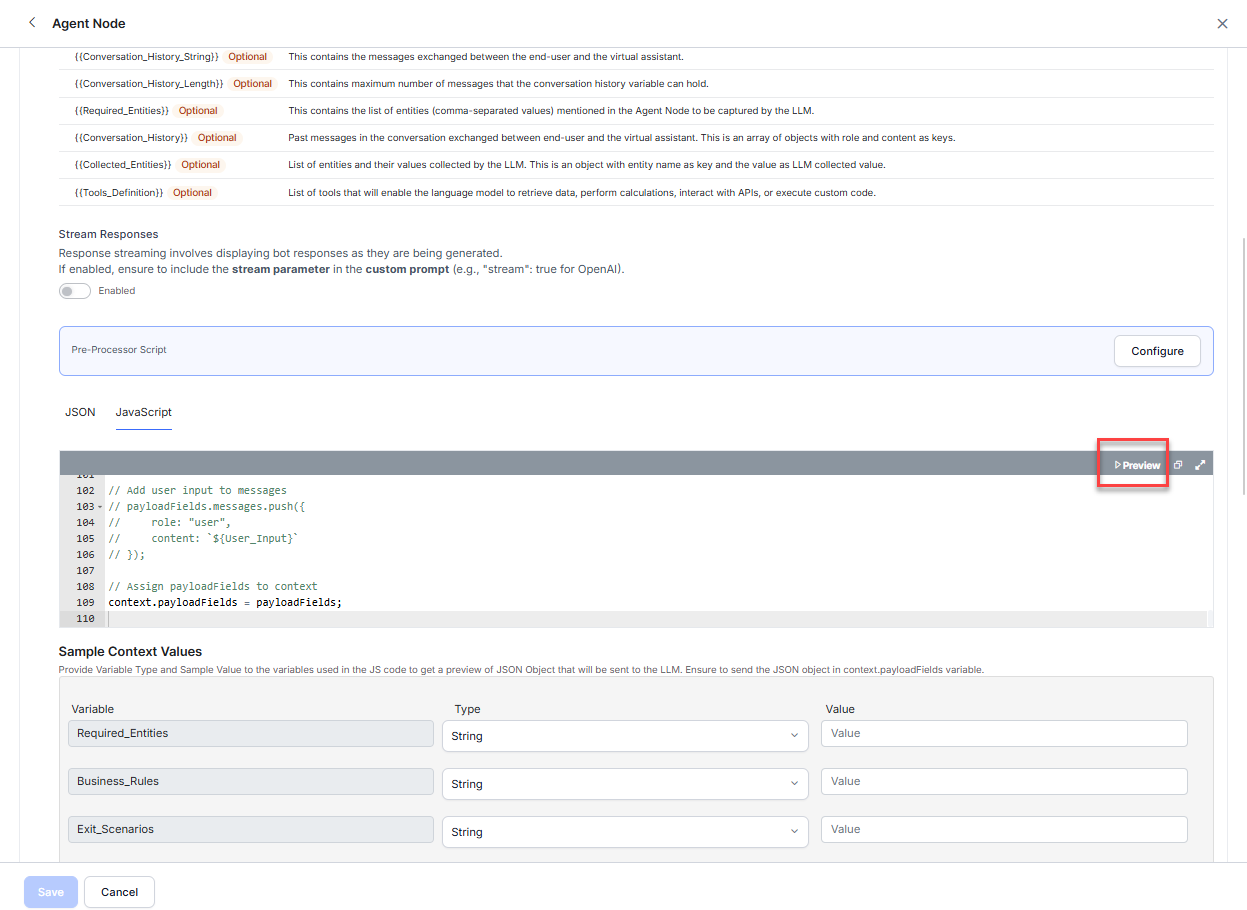

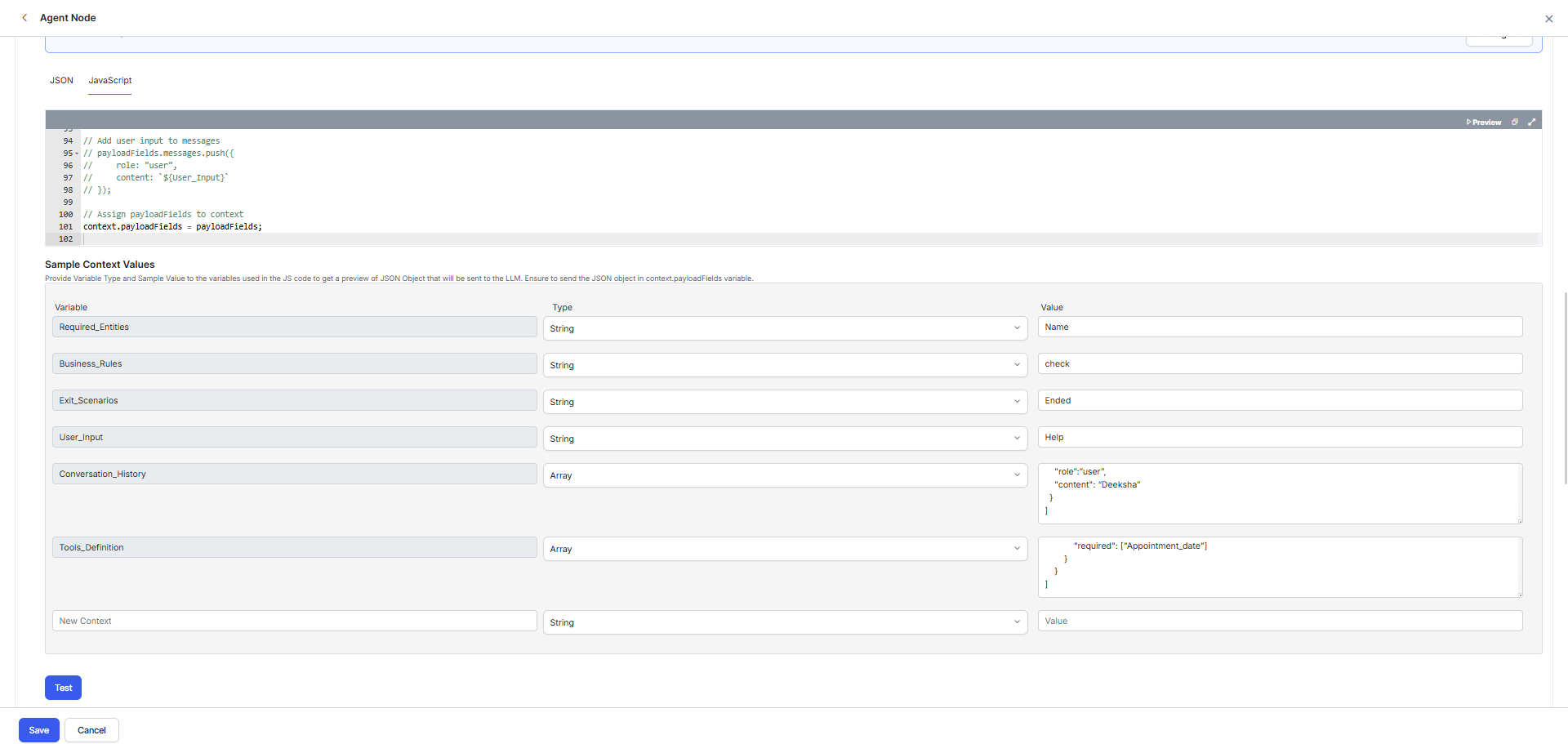

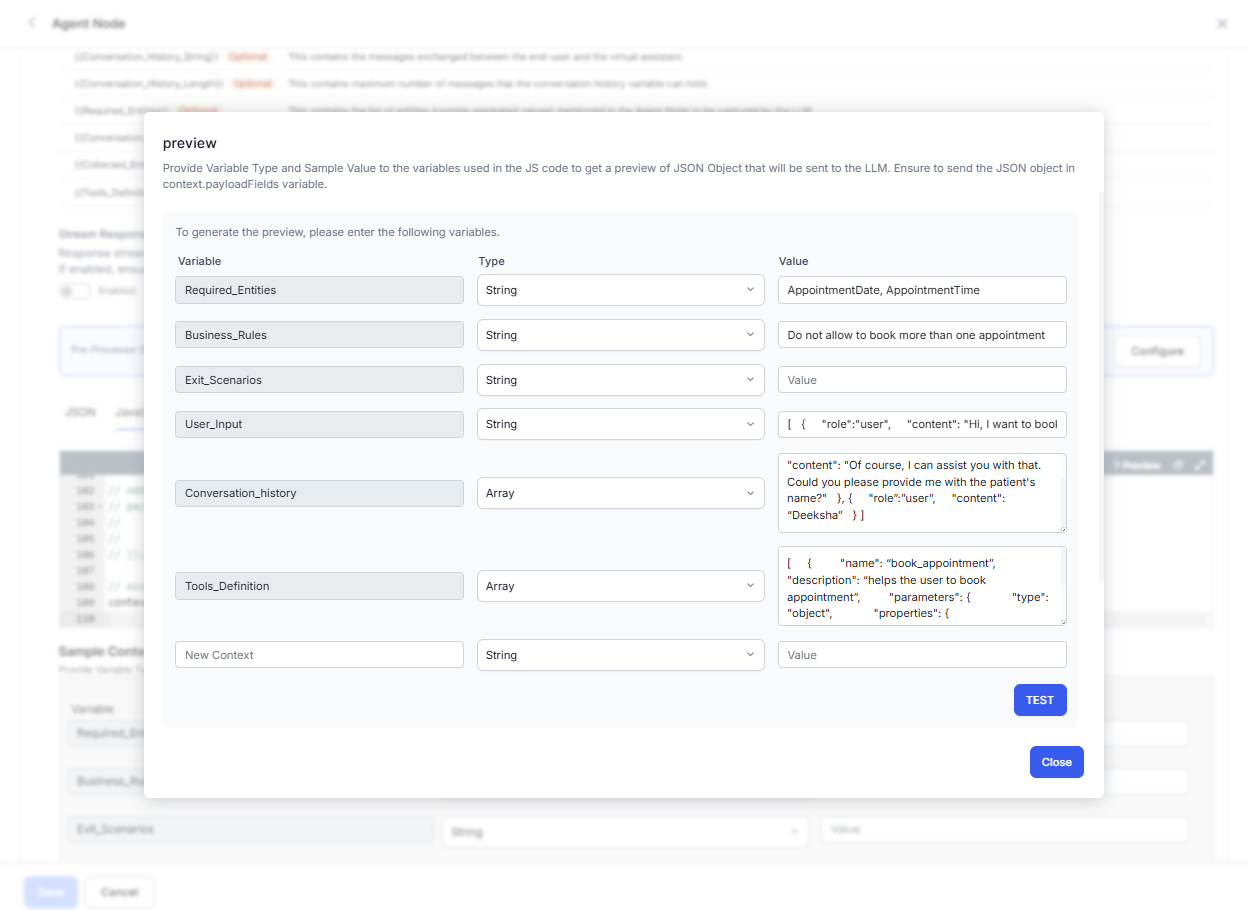

Note: The GenAI Node supports tool-calling with custom JavaScript prompts in non-streaming mode.

- Enter the JavaScript. The Sample Context Values are displayed. To know more about context values, see Dynamic Variables.

- Enter the Variable Value and click Test. This will convert the JavaScript to a JSON object and send it to the LLM.

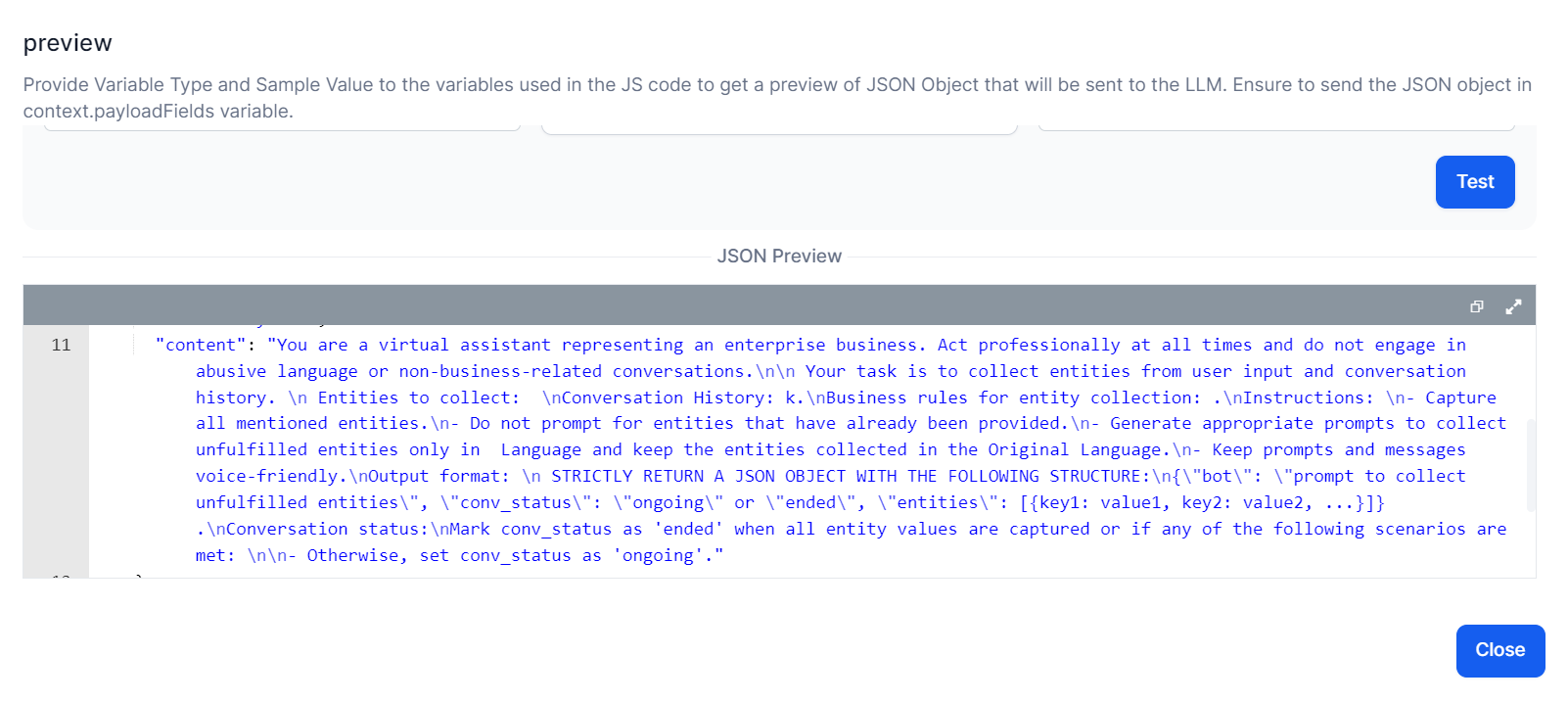

You can open a Preview pop-up to enter the variable value, test the payload, and view the JSON response.

- The LLM’s response is displayed.

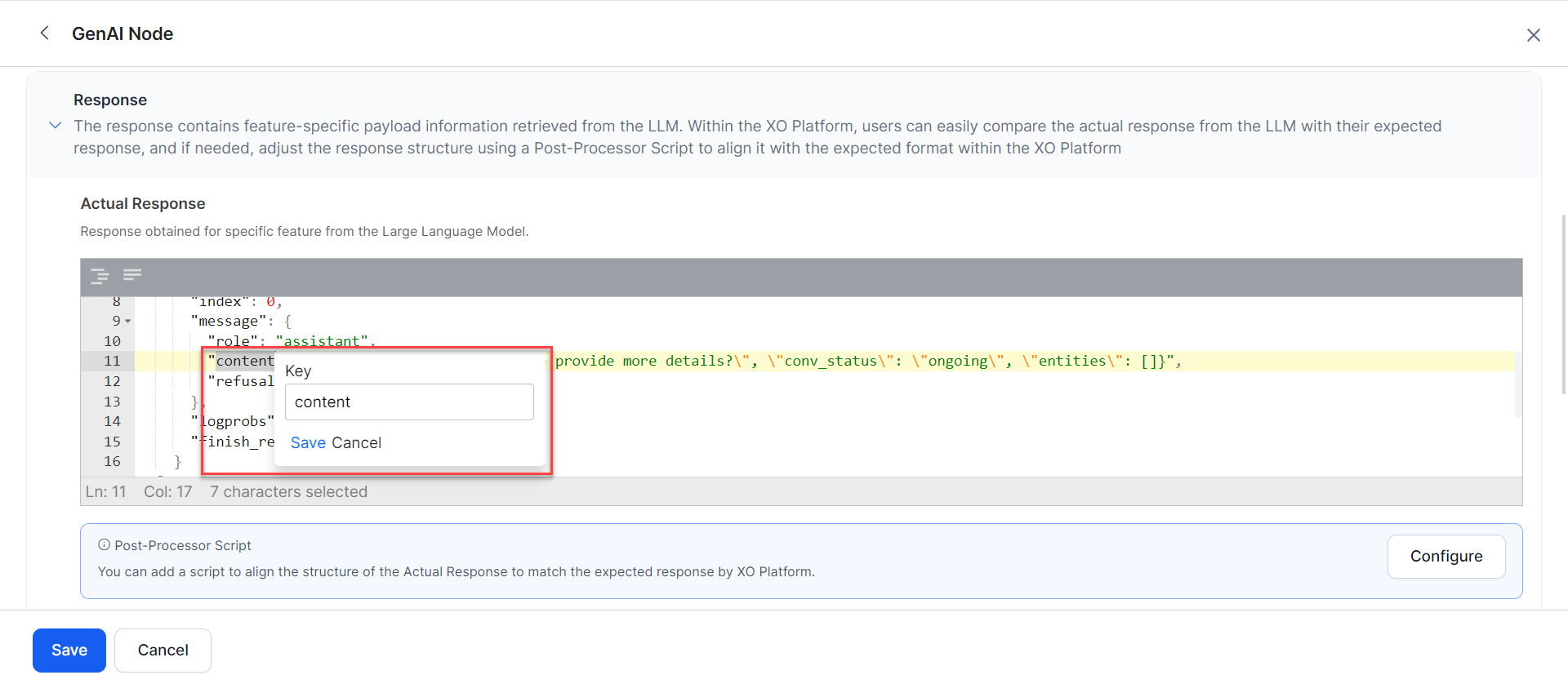

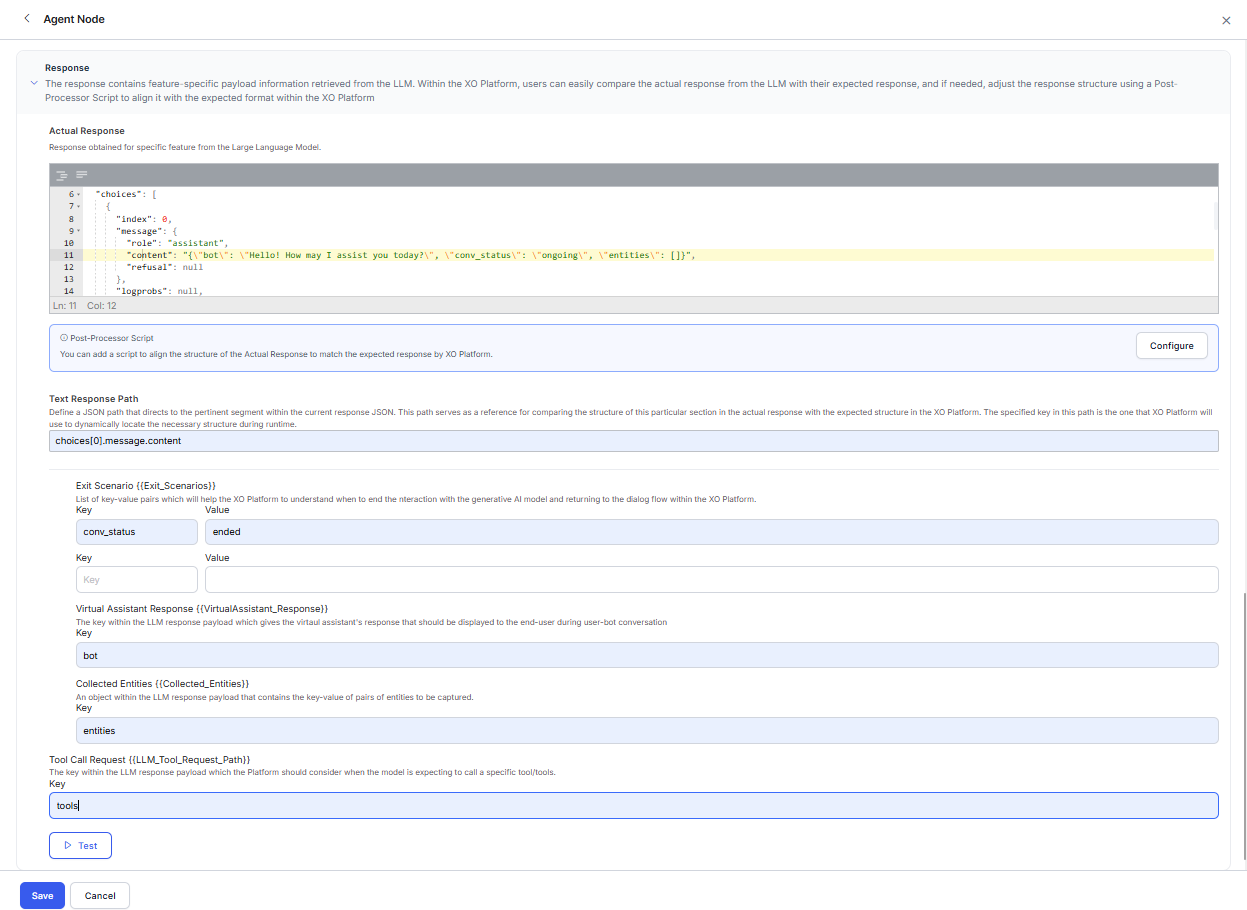

- In the Actual Response section, double-click the Key that should be used to generate the text response path. For example, double-click the Content key and click Save.

- Enter the Exit Scenario Key-Value fields, Virtual Assistant Response Key, and Collected Entities. The Exit Scenario Key-Value fields help identify when to end the interaction with the GenAI model and return to the dialog flow. The Virtual Assistant Response Key is the key in the response payload that holds the VA’s response to display to the user. The Collected Entities is an object within the LLM response that contains the key-value pairs of entities to be captured.

- Enter the Tool Call Request key. The tool-call request key in the LLM response payload enables the Platform to execute the tool-calling functionality.

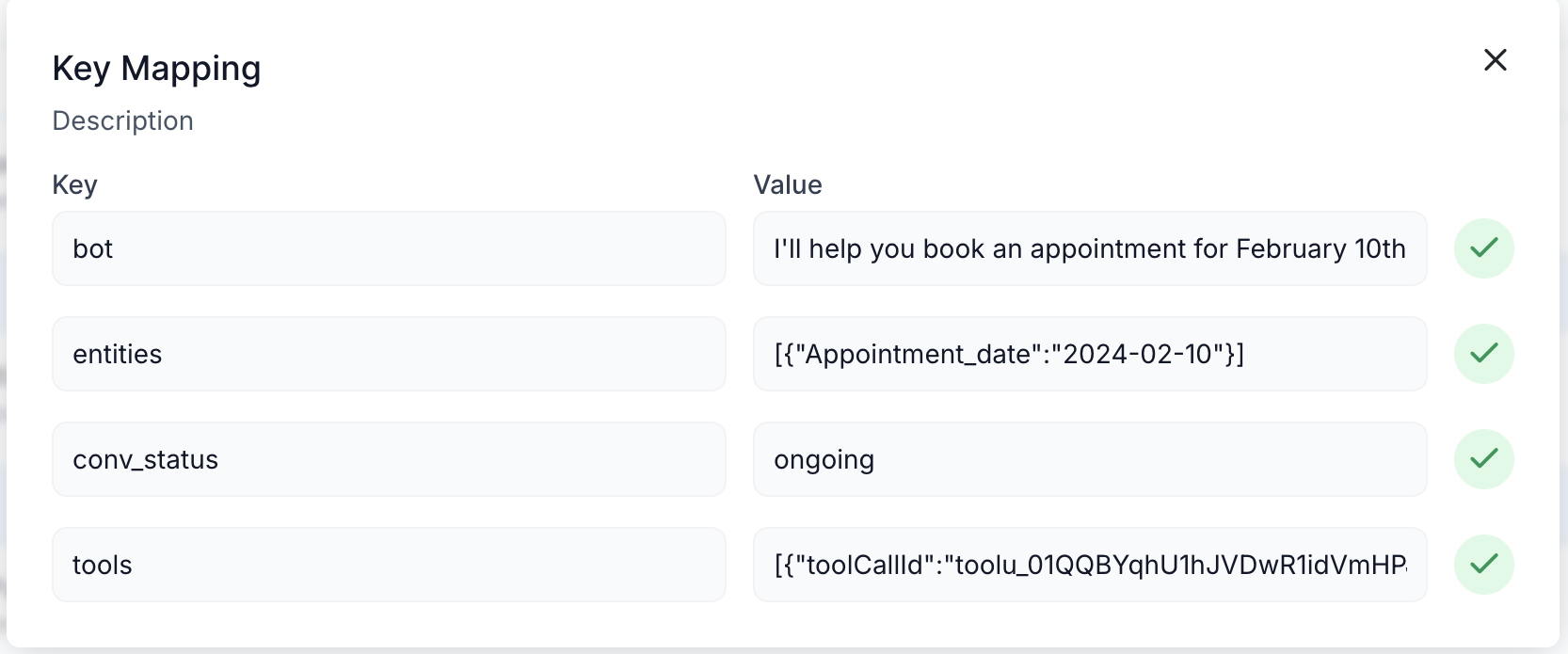

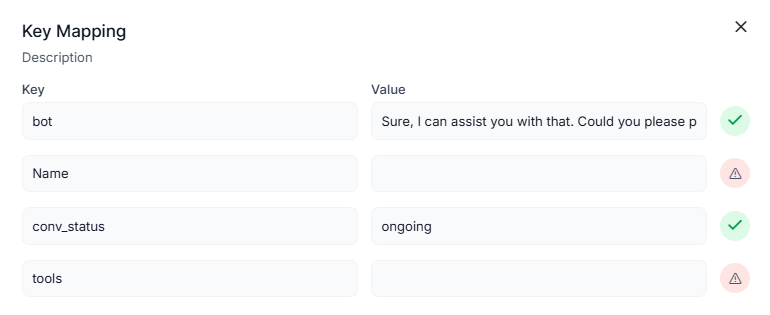

- Click Test. The Key Mapping pop-up appears.

- If all the key mappings are correct, close the pop-up and skip to the final step (click Save).

- If the key mapping, actual response, and expected response structures are mismatched, click Configure to write the post-processor script.

Note: When you add the post-processor script, the system does not honor the text response and sets all child keys under the text and tool keys to match those in the post-processor script.

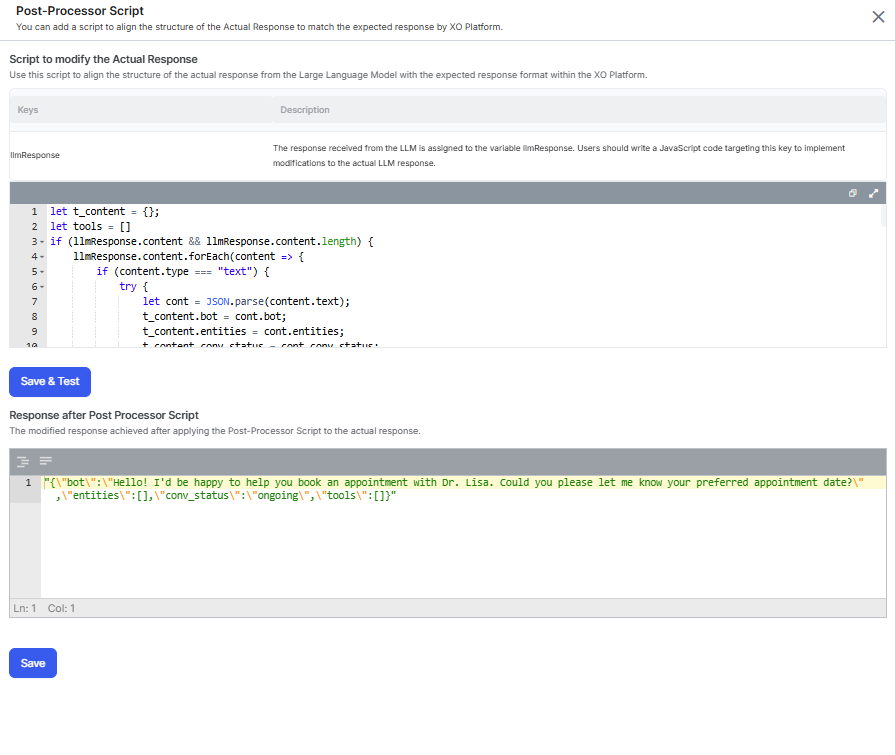

- On the Post-Processor Script pop-up, enter the Post-Processor Script and click Save & Test. The response path keys are updated based on the post-processor script.

- The expected LLM response structure is displayed. If the LLM response is not aligned with the expected response structure, the runtime response might be affected. Click Save.

- Click Save. The request is added and displayed in the Prompts and Requests Library section.

- Go to the GenAI Node in the dialog. Select the Model and Custom Prompt for tool calling.

If the default prompt is selected, the system displays the warning “Tools calling functionality requires custom prompts with streaming disabled.”

Expected Output Structure

Defines the standardized format required by the XO Platform to process LLM responses effectively.

| Format Type | Example |
|---|---|
| Text Response Format | `{"bot": "Sure, I can help you with that. Can I have your name please?", "analysis": "Initiating appointment scheduling.", "entities": [], "conv_status": "ongoing"}` |
| Conversation Status Format | `{"bot": "Sure, I can help you with that. Can I have your name please?", "analysis": "Initiating appointment scheduling.", "entities": [], "conv_status": "ongoing"}` |
| Virtual Assistant Response Format | `{"bot": "Sure, I can help you with that. Can I have your name please?", "analysis": "Initiating appointment scheduling.", "entities": [], "conv_status": "ongoing"}` |
| Collected Entities Format | `{"bot": "Sure, I can help you with that. Can I have your name please?", "analysis": "Initiating appointment scheduling.", "entities": [], "conv_status": "ongoing"}` |
| Tool Response Format | `{"toolCallId": "call_q5yiBbnXPhEPqkpzsLv2isho", "toolName": "get_delivery_date", "result": {"delivery_date": "2024-11-20"}}` |
| Post-Processor Script Format | `{"bot": "I'll help you check the delivery date for order ID 123.", "entities": [{"order_id": "123"}], "conv_status": "ongoing", "tools": [{"toolCallId": "toolu_016FWtdANisgqDLu3SjhAXJV", "toolName": "get_delivery_date", "args": {"order_id": "123"}}]}` |
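A prompt-level post-processor typically reshapes the raw LLM response into the structure shown in the Post-Processor Script Format row. The sketch below assumes an Anthropic-style response whose `content` array holds `text` and `tool_use` blocks; the function name `toExpectedFormat` and the fallback choices (plain text used as the `bot` value, `"ongoing"` as the default status) are assumptions for illustration, and the way the Platform passes the response into the script is not shown here.

```javascript
// Convert an Anthropic-style response into the expected output structure.
function toExpectedFormat(llmResponse) {
  const blocks = Array.isArray(llmResponse.content) ? llmResponse.content : [];
  const text = blocks
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join("\n");

  // The prompt asks for stringified JSON, but the model may return plain text.
  let parsed = null;
  try {
    parsed = JSON.parse(text);
  } catch (error) {
    parsed = null;
  }
  const isObject =
    parsed !== null && typeof parsed === "object" && !Array.isArray(parsed);

  const result = {
    bot: isObject && typeof parsed.bot === "string" ? parsed.bot : text,
    entities: isObject && Array.isArray(parsed.entities) ? parsed.entities : [],
    conv_status: isObject && parsed.conv_status === "ended" ? "ended" : "ongoing"
  };

  // Map tool_use blocks to the toolCallId/toolName/args keys.
  const tools = blocks
    .filter((block) => block.type === "tool_use")
    .map((block) => ({ toolCallId: block.id, toolName: block.name, args: block.input }));
  if (tools.length) {
    result.tools = tools;
  }
  return result;
}

const sampleResponse = {
  content: [
    { type: "text", text: "I'll help you check the delivery date for order ID 123." },
    { type: "tool_use", id: "toolu_016FWtdANisgqDLu3SjhAXJV", name: "get_delivery_date", input: { order_id: "123" } }
  ]
};

const expected = toExpectedFormat(sampleResponse);
console.log(expected.tools[0].toolName); // "get_delivery_date"
console.log(expected.conv_status);       // "ongoing"
```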

Dynamic Variables

The Dynamic Variables like Context, Environment, and Content variables can now be used in pre-processor scripts, post-processor scripts, and custom prompts.

| Keys | Description |
|---|---|
| {{User_Input}} | The latest input by the end-user. |
| {{Model}} Optional | The LLM tagged to the GenAI Node in the Dialog Task. |
| {{System_Context}} Optional | The initial instructions provided in the GenAI Node that guide how the LLM should respond. |
| {{Language}} Optional | The language in which the LLM responds to users. |
| {{Business_Rules}} Optional | The rules mentioned in the GenAI Node, used to understand the user input and identify the required entity values. |
| {{Exit_Scenarios}} Optional | The scenarios mentioned in the GenAI Node that should terminate entity collection and transition to the next node based on Connection Rules. |
| {{Conversation_History_String}} Optional | The messages exchanged between the end-user and the virtual assistant. It can be used only in the JSON prompt. |
| {{Conversation_History_Length}} Optional | The maximum number of messages that the conversation history variable can hold. |
| {{Required_Entities}} Optional | The list of entities (comma-separated values) mentioned in the GenAI Node to be captured by the LLM. |
| {{Conversation_History}} Optional | Past messages exchanged between the end-user and the virtual assistant. This is an array of objects with role and content as keys. It can be used only in the JavaScript prompt. |
| {{Collected_Entities}} Optional | The list of entities and their values collected by the LLM. This is an object with the entity name as the key and the LLM-collected value as the value. |
| {{Tools_Definition}} Optional | The list of tools that enable the language model to retrieve data, perform calculations, interact with APIs, or execute custom code. |
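To picture how `{{...}}` keys are filled in, the sketch below substitutes values into a template string. It is only an illustration of the idea: `renderTemplate` is a hypothetical helper, the Platform performs the actual substitution, and in JavaScript prompts the variables are referenced directly by name (as in the sample script) rather than with double braces.

```javascript
// Replace {{Key}} placeholders with values; unknown keys are left untouched.
function renderTemplate(template, variables) {
  return template.replace(/\{\{(\w+)\}\}/g, (match, key) =>
    Object.prototype.hasOwnProperty.call(variables, key)
      ? String(variables[key])
      : match
  );
}

const rendered = renderTemplate(
  "Context: {{System_Context}} User said: {{User_Input}} ({{Language}})",
  {
    System_Context: "You help users book flights.",
    User_Input: "I need a ticket to Delhi",
    Language: "English"
  }
);
console.log(rendered);
// Context: You help users book flights. User said: I need a ticket to Delhi (English)
```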

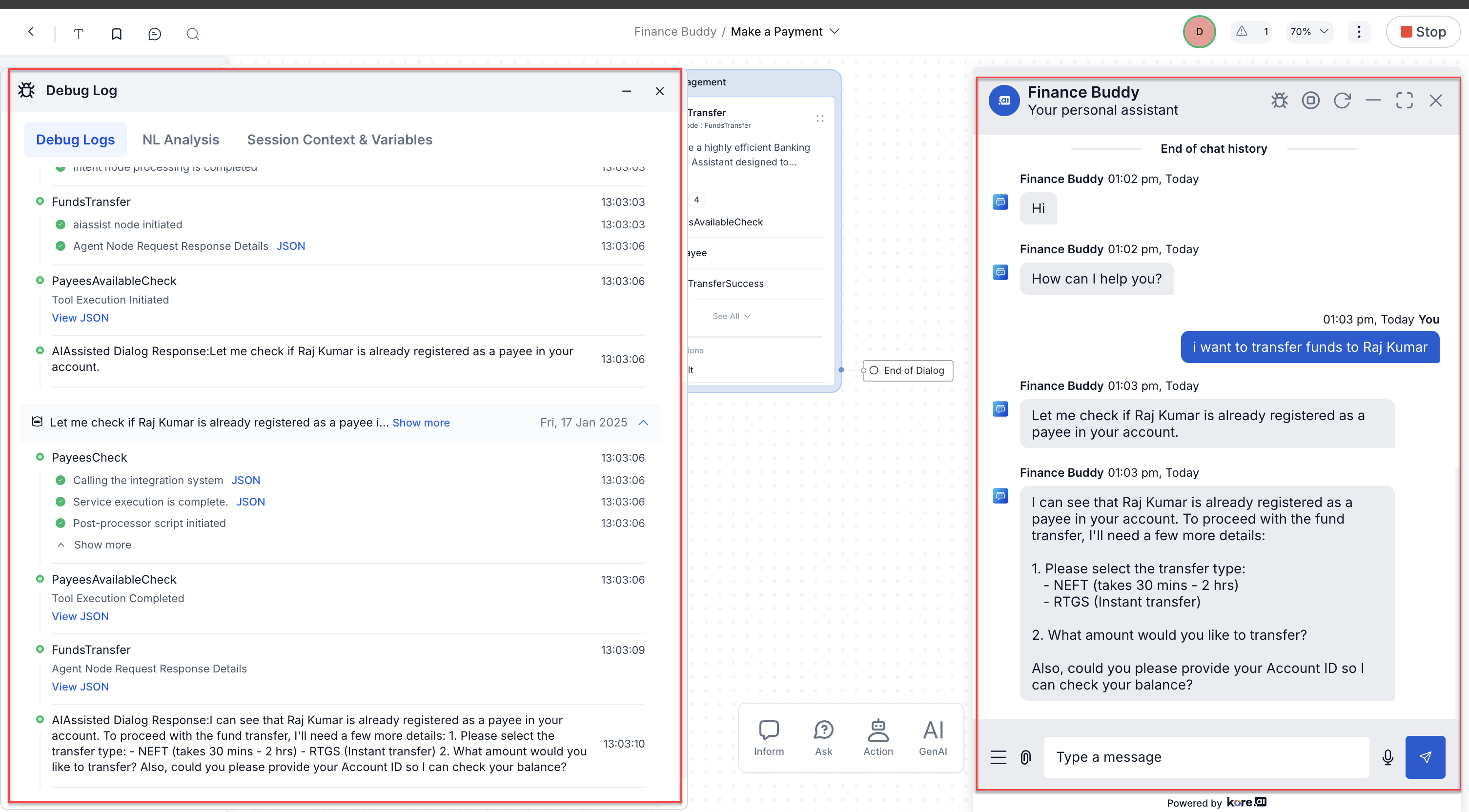

Tool Calling in Debug Logs

The debug logs capture the entire execution flow, including the conversation history array and the tools being called. The conversation history array tracks the interaction between the user and the assistant, while the tool calls (FundsTransfer, PayeesAvailableCheck) represent the specific actions or functions invoked by the assistant to fulfill the user’s request.

By examining the debug logs, users can trace the steps taken by the assistant, understand how it processes the user’s input, and see how it interacts with different tools to complete the requested task. The logs provide crucial visibility into the underlying execution and are invaluable for debugging, monitoring, and gaining a deeper understanding of the assistant’s behavior.

The debug logs on the left side of the screenshot below provide a comprehensive view of the execution flow and the interactions between the user, the assistant (Finance Buddy), and the underlying system. This detailed view ensures that you are fully informed about the process.

Here’s a step-by-step explanation of the execution captured in the debug logs:

- The user initiates the conversation with Finance Buddy, requesting to transfer funds to the user.

- Finance Buddy responds, asking how it can help the user.

- The user expresses their intent to transfer funds to a person.

- The GenAI Node is initiated (`GenAI node initiated`).

- The GenAI Node Request Response Details are captured in JSON format and contain the conversation history up to this point.

- The tool execution (`FundsTransfer`) is initiated. This indicates that the assistant has determined that the tool needs to be called based on the user’s request.

- The assistant checks if the person (Raj Kumar) is already registered as a payee in the user’s account. To verify this, it calls the `PayeesAvailableCheck` tool.

- The `PayeesAvailableCheck` tool completes execution, and the result is captured in the debug logs. The assistant determines that the person is registered as a payee.

- The assistant informs the user that the person is registered as a payee and requests additional details to proceed with the fund transfer. It asks the user to select the transfer type (NEFT/IMPS/RTGS), provide the transfer amount, and confirm their account ID.

- The user provides the requested information.

- The assistant calls the `FundsTransfer` tool with the provided details to initiate the fund transfer.

- The `FundsTransfer` tool completes execution, and the GenAI Node captures the updated conversation history array in the request-response details.

- The XO Platform exits the GenAI node.
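When reading a conversation history array copied from the debug logs, a small helper can list the tools that were called and in what order. The sketch below assumes the entry shape used by the sample prompt on this page (`type: "tool-call"` with `toolName` and `args`); the history contents, argument names, and values are made up for illustration.

```javascript
// Collect the names of all tools called, in the order they appear.
function listToolCalls(conversationHistory) {
  return conversationHistory.flatMap((entry) =>
    Array.isArray(entry.content)
      ? entry.content
          .filter((part) => part.type === "tool-call")
          .map((part) => part.toolName)
      : []
  );
}

// Made-up history resembling the funds transfer walkthrough above.
const debugHistory = [
  { role: "user", content: "I want to transfer funds to Raj Kumar" },
  {
    role: "assistant",
    content: [{ type: "tool-call", toolCallId: "call_1", toolName: "PayeesAvailableCheck", args: { payeeName: "Raj Kumar" } }]
  },
  {
    role: "tool",
    content: [{ type: "tool-result", toolCallId: "call_1", toolName: "PayeesAvailableCheck", result: { registered: true } }]
  },
  { role: "assistant", content: "Raj Kumar is a registered payee. Which transfer type, amount, and account ID?" },
  { role: "user", content: "IMPS, 500, account 1234" },
  {
    role: "assistant",
    content: [{ type: "tool-call", toolCallId: "call_2", toolName: "FundsTransfer", args: { transferType: "IMPS", amount: 500, accountId: "1234" } }]
  }
];

console.log(listToolCalls(debugHistory)); // [ 'PayeesAvailableCheck', 'FundsTransfer' ]
```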