Once you have built and trained your bot, the most important question that arises is how good is your bot’s learning model? So, evaluating your bot’s performance is important to delineate how good your bot understands the user utterances.

The Batch Testing feature helps you discern the ability of your bot to correctly recognize the expected intents and entities from a given set of utterances. This involves the execution of a series of tests to get a detailed statistical analysis and gauge the performance of your bot’s ML model.

The Batch Testing feature also supports the Zero-shot Model feature for intent detection, enabling performance evaluation even without pre‑trained intent‑based training utterances. To use it, ensure that the Zero-shot ML Model feature is enabled and the Machine Learning Network Type is Zero-shot model. To know about the support LLMs, see Model Feature Matrix.

Note: The Batch Testing dashboard displays the summary of test coverage, performance, and training recommendations for multiple test suites based on validations of the intent identification capabilities of your NLU model. The Health and Monitoring dashboard is a one-stop place that displays this information for the individual test suite(s) the user selects. Learn More.

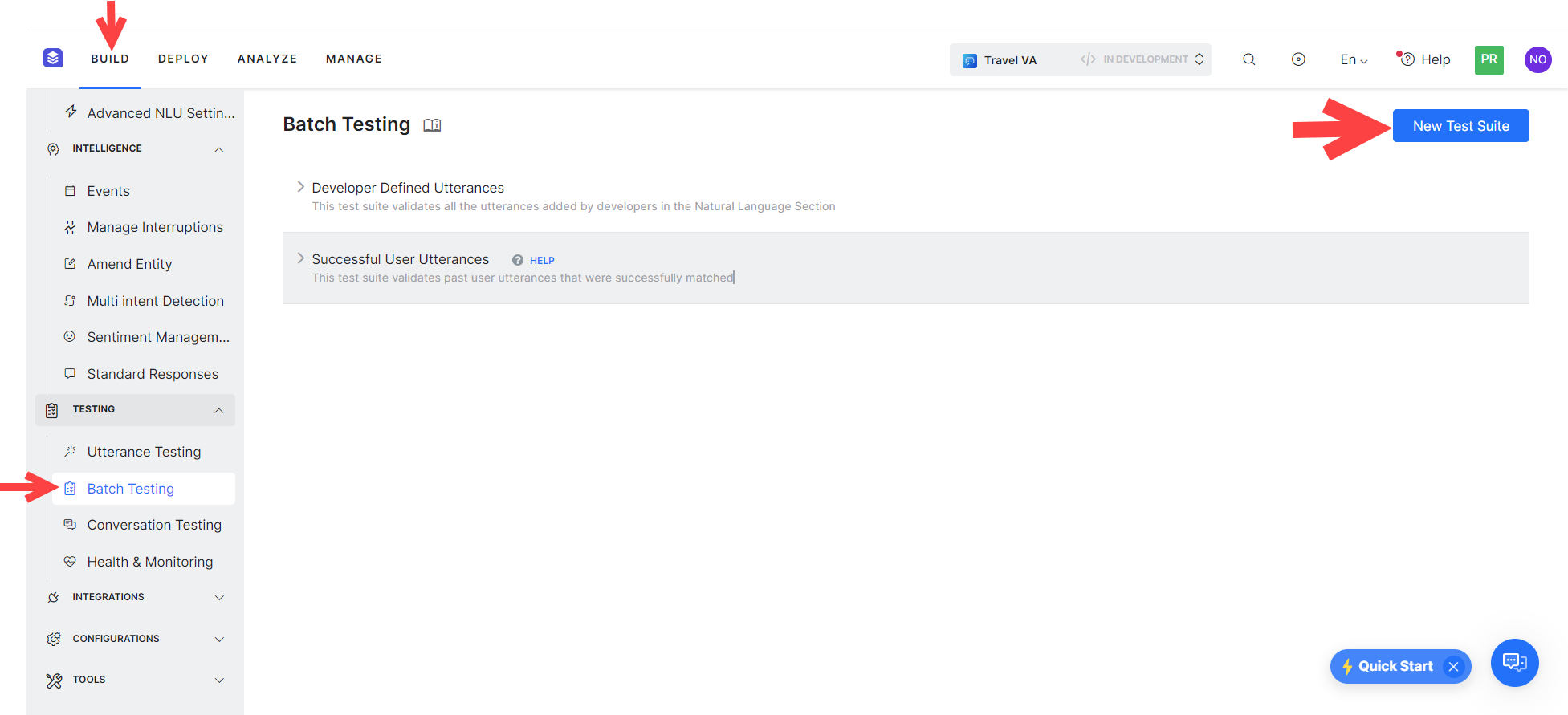

To conduct a batch test, you can use predefined test suites available in the builder or create your own custom test suites. Based on your requirement, the test suites can be run to view the desired results. This option can be accessed from the Testing -> Batch Testing option from the left navigation menu.

Best Practices

- An optimal approach to bot NLP training is to first create a test suite of most of the use cases(user utterances) that the bot needs to identify, run it against the model and start training for the ones that failed.

- Create/update batch testing modules for high usage utterances.

- Publish the trained model only after detailed testing.

- When naming the intent, ensure that the name is relatively short (3-5 words) and does not have special characters or words from the Stop Wordlist. Try to ensure the intent name is close to what the users request in their utterance.

- Batch Test executions do not consider the context of the user. Hence you might see some False Negatives in the test results which in fact are True Positives in the actual bot when the context is taken into consideration.

- The ‘count’ in Batch Test results refers to the ‘unique assertion’ statements and not necessarily the number of the rows in the CSV file. Batch tests use a rule to validate the unique assertions based on the ‘utterance’ in consecutive rows. If two consecutive rows have same utterances and different entity values, the XO platform considers it as one assertion statement which accepts both the entity values.

- Batch testing uses the original user input for intent scoring instead of the spell-corrected input.

Managing Test Suites

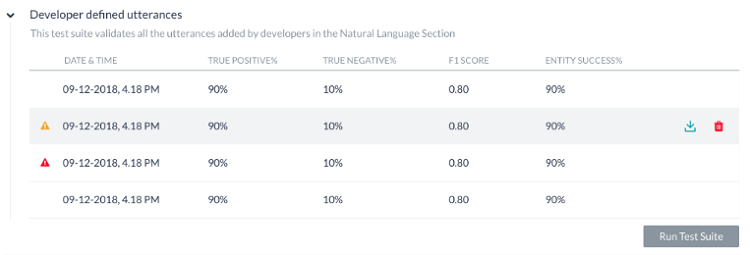

Kore.ai provides a few out-of-the-box Test Suites to perform batch testing. ‘Developer defined utterances’ and ‘Successful user utterances’ are the built-in test suites that can be run to perform Batch Testing. You can also create a New Test Suite for testing a custom set of utterances.

Developer defined utterances

This test suite validates the utterances that have been previously added and trained by the developer from Machine Learning Utterances screen. Using this test suite would mean testing collectively the entire set of utterances that a developer has added for all tasks of the bot.

Successful user utterances

This test suite includes all the end-user utterances that have successfully matched one or more intents and the corresponding task is fully executed. You can also find these utterances from the ‘Intent found’ section of the Analyze module.

Adding a New Test Suite

To add a new test suite, follow the steps below:

-

- Go to Build > Testing > Batch Testing, and click New Test Suite.

- In the New test Suite window, add a name and provide a description.

- Next, choose how to add test cases: either manually or by uploading a test case file.

- Selecting Add Manually lets you add test cases manually or use LLM and Generative AI to generate test cases automatically.

- Selecting Upload Test Cases File lets you upload a file containing test cases.

Adding Test Cases Manually

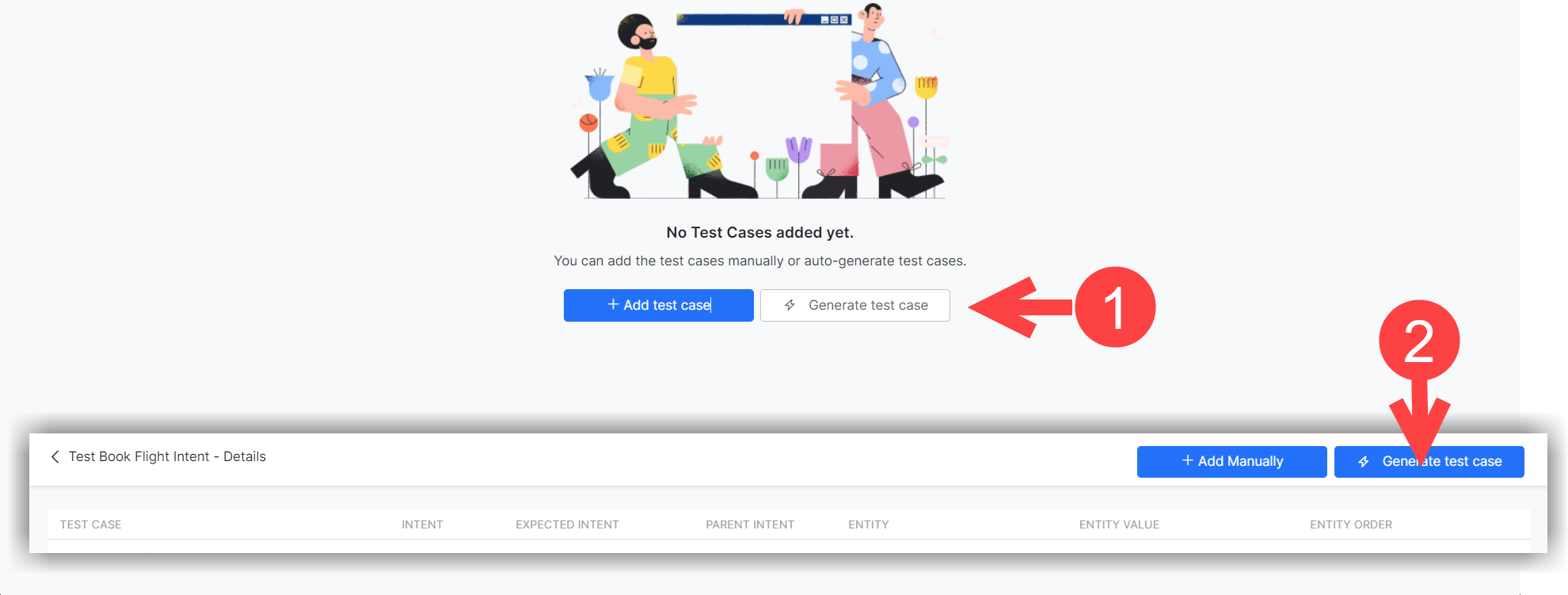

Once you create a test suite to which you have chosen to add test cases manually, you can either add them manually or generate them automatically.

In the Test Cases page, click the +Add Test Case.

In the Add Test Case window, select or enter information in the following fields:

- The Intent that corresponds to the Dialog Task you want to test. You can add/tag one or more intents to a test utterance.

You can add up to three intents (Dialog, FAQ, or Small Talk) for a test utterance. It helps in scenarios where ambiguity is by-design and should be considered as True Positive. For example, for the utterance “I need help to book a flight ticket,” the expected intent can be either “Book flight” (Dialog) or “How to book a flight?” (FAQ).

- The Parent Intent within that task.

- Test Utterances: You can add multiple utterances, each on a new line.

- The Entity Order: Select one from the available options, depending on the intent you are working with. You can only select one item. The Entity Order selection is not available if you have added multiple intents.

One Intent for a Test Utterance

Multiple Intents for a Test Utterance

- Click Save when ready.

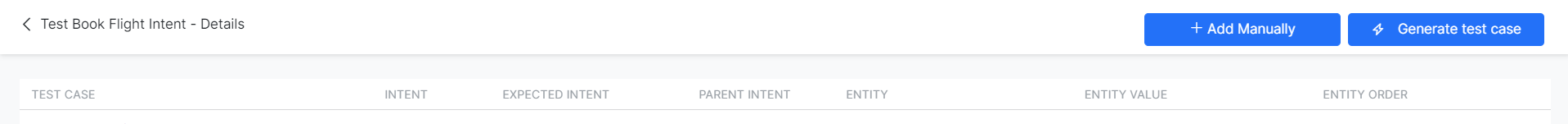

- Once added, your test cases are listed and ready to run. You can use the Add Manually button at the top of the list to add more test cases. The option to Generate Test cases only displays if you have enabled LLM and Generative AI for the VA.

Generating Test Cases Automatically

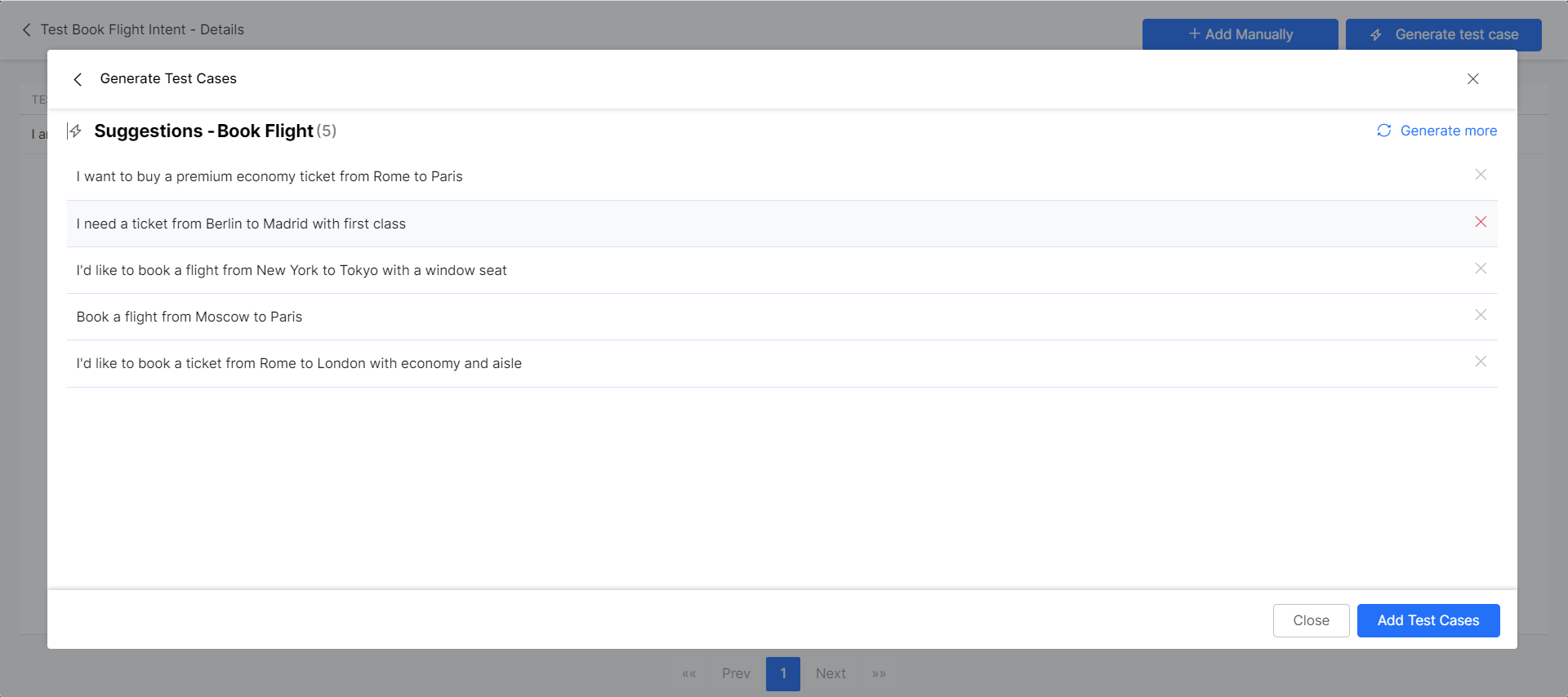

You can automatically generate batch test cases if you have enabled LLM and Generative AI for your Virtual Assistant. You can access the feature either right after creating a new test case, as shown above, or from the test cases list.

Steps to Generate Test Cases

To generate test cases automatically, follow the steps below:

- Click Generate Test Cases from any of the two areas mentioned above.

- In the Generate Test Case window, select the Dialog Task you want to test.

- Click Generate. Wait for a few moments until the generation completes.

- Once test cases are generated, you can reject some of them. If required, click Generate more to get more suggestions.

- Click Add Test Cases when ready.

Once you add the test cases, they are listed within your test suite, along with any other cases you might have added manually or generated in the past. You can continue adding test cases to the suite using these two methods.

Adding a Test Suite by Uploading a Test Cases File

If you Upload a Test Cases File, you can import an array of test utterances, also known as a Dataset at once in a batch file. The Dataset file must in a CSV or JSON format and can have a maximum of 1000 utterances. You can download the sample CSV or JSON file formats while creating the test suite.

Important Tip: For a Universal Bot, to create a test suite, you can add multiple intents in the CSV/JSON file using the upload method. The format includes the intents and the corresponding bot names as follows:

{

“input”: “Utterance”,

“intent”: “Intent 1|Intent 2|Intent 3”,

“botName”: “Bot 1|Bot 2|Bot 3”

}

Example

{

“input”: “Need to check the multibot function”,

“intent”: “Age Predictor|IVRnode|Check Rephase”,

“botName”: “Check Bot|XOP211|Check Rephase”

}

The ability to create test suites manually is not yet available for universal bots.

JSON Format for Test Suite

The JSON format for creating custom suites allows you to define an array of test cases where each test case should consist of an utterance to be tested, the intent against which the utterance to be tested, and optionally define the list of expected entities to be determined from the utterance. If expected intent is a child intent, then you can also include the parent intent to be considered.

The sample code is given below:

{

"testCases": [

{

"input": "Send 200 dollars to Leonardo",

"intent": "Transfer Funds",

"entities": [

{

"entityValue": "200 USD",

"entityName": "TransferAmount"

},

{

"entityValue": "Leonardo",

"entityName": "PayeeName"

}

],

"entityOrder":["TransferAmount", "PayeeName"]

},

{

"input": "What is the balance in my checking account",

"intent": "Show Balance",

"parentIntent": "Transfer Funds"

},

{

"input": "Repeat this transfer every month",

"intent": "Setup Auto Pay",

"parentIntent": "Transfer Funds"

},

{

"input": "Show my past 20 transactions",

"intent": "Show Account Statement",

"entities": [

{

"entityValue": "20",

"entityName": "HistorySize"

}

]

},

{

"input": "Pay my credit card dues",

"intent": "Pay Bill"

},

{

"input": "I need to pay my monthly credit card bill",

"intent": "Pay Bill | Setup Auto Pay | Transfer Funds"

},

{

"input": "Open an account for me",

"intent": "Open Account | How to Open Account?"

},

{

"input": "looks like something is wrong in my statement",

"intent":"trait: Account Statement||Issue||Account Type"

},

{

"input": "How are you doing today",

"intent":"emohowzit"

},

{

"input": "Thank you",

"intent":"thank~you"

},

{

"input": "I understand",

"intent":"i_understand"

},

{

"input": "That's great",

"intent":"~positiveackwords"

},

{

"input": "How many people can you speak to at once?",

"intent":"How many people can you speak to at once?"

},

{

"input": "That's sweet",

"intent":"That's sweet",

"parentIntent": "How many people can you speak to at once?"

},

{

"input": "What else can you do?",

"intent":" What else can you do?",

"parentIntent": "How many people can you speak to at once? >> That's sweet "

},

{

"input": "What else can you do?",

"intent":"What else"

},

{

"input": "What's your age?",

"intent":"How old are you?"

},

{

"input": "Who created you?",

"intent":"Who made you?"

},

{

"input": "How many people talk to you?",

"intent":"How many people can you speak to at once?"

},

{

"input": "What's your name?",

"intent":"What is your name?"

},

{

"input": "What are you called?",

"intent":"What ~you called?"

}

]

}

- For Entities that have the Multi-Item enabled, values need to be given as:

entity1||entity2 - Composite Entities require passing values in the following format:

component1name:entityValue|component2name:entityValue2The sample code is given below:{ "testCases": [ { "input": "Add cart to Apples , Grapes", "intent": "Add to cart", "entities": [ { "entityValue": "Apples||Grapes", "entityName": "LOV" } ] }, { "input": "I booked a ticket from Hyderabad on July 6th, 2018 and it costed me Rs. 1200", "intent": "Book a flight", “entities”: [ { "entityValue": "City:Hyderabad|Date:2018-07-06}Curr:1200 INR", "entityName": "Composite5", } ] } } - The order in which the entities are to be extracted can be given as:

"entityOrder":["TransferAmount", "PayeeName"]. If the order is not provided or partially provided, the platform determines the shortest route covering all the entities as the default order.

| Property Name | Type | Description |

|---|---|---|

| Test Cases | Array | Consists of the following:

|

| input | String | End-user Utterance. Note that if any of the utterances are beyond 3000 characters, the file upload would be rejected. |

| intent | String | Determine the objective of an end-user utterance (can be task name or primary question in case of FAQ test case)

Post-release 7.3, this property can be used to define traits to be identified against this utterance by using the prefix “trait” for example, Trait: Trait Name1|| Trait Name2||Trait Name3 Post-release 8.0, this property can include the expected Small Talk pattern. |

| entities | Array [Optional] | Consists of an array of entities to be determined from the input sentence:

|

| entityValue | String | Value of the entity expected to be determined from the utterance. You can define the expected Entity Value as a string or use a Regular Expression. For the purpose of Batch Testing, the platform flattens all entity values into string formats. Please refer to the Entity Format Conversions section for more information. |

| entityName | String | Name of the entity expected to be determined from the utterance |

| entityOrder

(ver7.1 onwards) |

Array [Optional] | An array of entity names specifying the order in which the entities are to be extracted.

If the order is not provided or partially provided, the platform determines the shortest route covering all the entities as the default order. |

| parentIntent | String [Optional] | Define parent intent to be considered if the intent is a sub-intent. In the case of Small Talk, this field should be populated when the Small Talk is contextual follow-up intent; in case of multi-level contextual intent the parent intents should be separated by the delimiter || |

CSV Format for Test Suite

CSV format for creating custom suites allows you to define test cases as records in CSV file where each test case should consist of an utterance to be tested, the intent against which the utterance to be tested, and optionally define entities to be determined from the utterance. If your test case requires more than one entity to be detected from a sentence, then you have to include an extra row for each of the additional entities to be detected. If expected intent is a child intent, then you can also include the parent intent to be considered.

input,intent,parentIntent,entityName,entityValue,entityOrder Send 200 dollars to Leonardo, Transfer Funds,,TransferAmount,200 USD,, ,,,PayeeName,Leonardo, TransferAmount>PayeeName What is the balance in my checking account,Show Balance, Transfer Funds,,, Repeat this transfer every month, Setup Auto Pay, Transfer Funds,,, Show my past 20 transactions, Show Account Statement,,HistorySize,20,, Pay my credit card dues,Pay Bill,,,,,

- For Entities that have the Multi-Item enabled values need to be given as:

entity1||entity2 - Composite Entities require the values in the following format:

component1name:entityValue|component2name:entityValue2input,intent,parentIntent,entityName,entityValue “Add cart to Apples , Grapes”,Add to cart,LOV,Apples||Grapes,, “I booked a ticket from hyderabad on July 6th, 2018 and it cost me Rs. 1200”,Composite Test,Composite55,City5:Hyderabad|Date5:2018-07-06”|Curr5:1200 INR

- The order of extraction of entity value can be mentioned in the following format:

entity3>entity4>entity1. If the order is not provided or partially provided, the platform determines the shortest route covering all the entities as the default order.

| Column Name | Type | Description |

|---|---|---|

| input | String | Utterance given by the end-user. Note that if any of the utterances are beyond 3000 characters, the file upload would be rejected. |

| intent | String | Determine the objective of an end-user utterance (can be task name or primary question in case of FAQ test case)

Post release 7.3, this property can be used to define traits to be identified against this utterance by using the prefix “trait” for example, Trait: Trait Name1|| Trait Name2||Trait Name3 Post-release 8.0, this property can include the expected Small Talk pattern. |

| parentIntent | String [Optional] | Define parent intent to be considered if the intent is a sub-intent In the case of Small Talk, this field should be populated when the Small Talk is contextual follow-up intent and the intent would be matched assuming that the follow-up intent criteria is met; in the case of multi-level contextual intent the parent intents should be separated by the delimiter || |

| entityValue | String [Optional] | Value of the entity expected to be determined from the utterance. You can define the expected Entity Value as a string or use a Regular Expression. For the purpose of Batch Testing, the platform flattens all entity values into string formats. Please refer to the Entity Format Conversions section for more information. |

| entityName | String [Optional] | Name of the entity expected to be determined from the utterance |

| entityOrder

(ver7.1 onwards) |

Array [Optional] | An array of entity names separated by > specifying the order in which the entities are to be extracted.

If the order is not provided or partially provided, the platform defines the implicit order to process first the NER and pattern entities and then the remaining entities. |

Entity Format Conversions

| Entity Type | Sample Entity ValueType | Value in Flat Format | Order of Keys |

|---|---|---|---|

| Address | P.O. Box 3700 Eureka, CA 95502 | P.O. Box 3700 Eureka, CA 95502 | |

| Airport | { “IATA”: “IAD”, “AirportName”: “Washington Dulles International Airport”, “City”: “Washington D.C.”, “CityLocal”: “Washington”, “ICAO”: “KIAD”, “Latitude”: “38.94”, “Longitude”: “-77.46” } | Washington Dulles International Airport IAD KIAD 38.94 -77.46 Washington D.C. Washington | AirportName IATA ICAO Latitude Longitude City CityLocal |

| City | Washington | Washington | |

| Country | { “alpha3”: “IND”, “alpha2”: “IN”, “localName”: “India”, “shortName”: “India”, “numericalCode”: 356} | IN IND 356 India India | alpha2 alpha3 numericalCode localName shortName |

| Company or Organization Name | Kore.ai | Kore.ai | |

| Color | Blue | Blue | |

| Currency | [{ “code”: “USD”, “amount”: 10 }] | 10 USD | amount code |

| Date | 2018-10-25 | 2018-10-25 | |

| Date Period | { “fromDate”: “2018-11-01”, “toDate”: “2018-11-30” } | 2018-11-01 2018-11-30 | fromDate toDate |

| Date Time | 2018-10-24T13:03:03+05:30 | 2018-10-24T13:03:03+05:30 | |

| Description | Sample Description | Sample Description | |

| user1@emaildomain.com | user1@emaildomain.com | ||

| List of Items(Enumerated) | Apple | Apple | |

| List of Items(Lookup) | Apple | Apple | |

| Location | { “formatted_address”: “8529 Southpark Cir #100, Orlando, FL 32819, USA”, “lat”: 28.439148,”lng”: -81.423733 } | 8529 Southpark Cir #100, Orlando, FL 32819, USA 28.439148 -81.423733 | formatted_address lat lng |

| Number | 100 | 100 | |

| Person Name | Peter Pan | Peter Pan | |

| Percentage | 0.25 | 0.25 | |

| Phone Number | +914042528888 | +914042528888 | |

| Quantity | { “unit”: “meter”, “amount”: 16093.4, “type”: “length”, “source”: “10 miles” } | 16093.4 meter length 10 miles | amount unit type source |

| String | Sample String | Sample String | |

| Time | T13:15:55+05:30 | T13:15:55+05:30 | |

| Time Zone | -04:00 | -04:00 | |

| URL | https://kore.ai | https://kore.ai | |

| Zip Code | 32819 | 32819 |

Importing a Dataset file

- Click New Test Suite on the batch testing page. A dialog box to import the dataset appears.

- Enter a Name, Description, and choose a Dataset Type in the respective boxes for your dataset file.

- To import the Dataset file, click Choose File to locate and select a JSON or CSV file containing the utterances as per the Dataset Type selected.

- Click Create. The dataset file is displayed as an option to run the test suite on the Batch Testing page:

Running Test Suites

The following steps guide you on how to run a batch test on your bot and get a detailed analytical report on the utterances based on the test results. To get started, go to Build > Testing > Batch Testing.

To run a Test Suite, follow the steps below:

- Click the desired test suite name in the Batch Testing window.

Note that Developer Defined Utterances and Successful User Utterances are default test suites to validate user utterances through Batch Testing. - Select In Development to run batch tests on test suites for only the in-development version of the Virtual Assistant(s). Alternatively, select Published to run batch tests on test suites for only the published version of the Virtual Assistant(s).

- Click Run Test Suite to initiate the batch test execution.

Execution of Newly Created Batch Test Suites

Once you create a new test batch test suite, by default, the system automatically initiates test run execution for both In-development and Published VA versions. This makes it convenient to assess test results while the VA is in development and once a VA is published following a production release.

Add Notes and Comments to Batch Testing

You can add notes or reasons to record the purpose or changes made for each test run when executing a batch test.

Steps to Add and Update a Batch Test Note

- Click Run Test Suite to start batch test execution.

- When a batch test is running or completed, click the Notes icon for the required test run.

- Type your comment in the text window.

Add a New Note

Note: You can add up to 1024 characters.

Update an Existing Note

- After the test run is complete, you can click the Notes icon to view the new or updated comment.

Cancel an Ongoing Batch Test Execution

You can cancel an ongoing batch test execution in either Published or In-Development mode. This feature allows you to make changes, intervene, or start another batch test suite execution without waiting for the ongoing test run to complete.

Steps to Cancel an Ongoing Batch Test Execution:

- Navigate to Build > Testing > Batch Testing on the Bot Builder.

- When the required test suite is running, click the Cancel icon.

- Click Confirm in the confirmation dialog.

The test execution is canceled, and a confirmation message is displayed.

Important Considerations

- For a canceled batch test suite, you cannot download the test results CSV file.

- An orange Warning icon indicates that the test suite execution is canceled.

- A red Warning icon indicates a failed batch test due to an unexpected technical error.

Error Scenarios

You cannot cancel a batch test run in the following scenarios:

- When another batch test execution/cancelation is in progress.

Solution: Wait for one test suite execution or cancellation to complete before initiating the process for another. - When you try to cancel a batch test run in the Published mode without publishing the VA.

Solution: First, train and publish the VA before canceling the batch test run in Published mode.

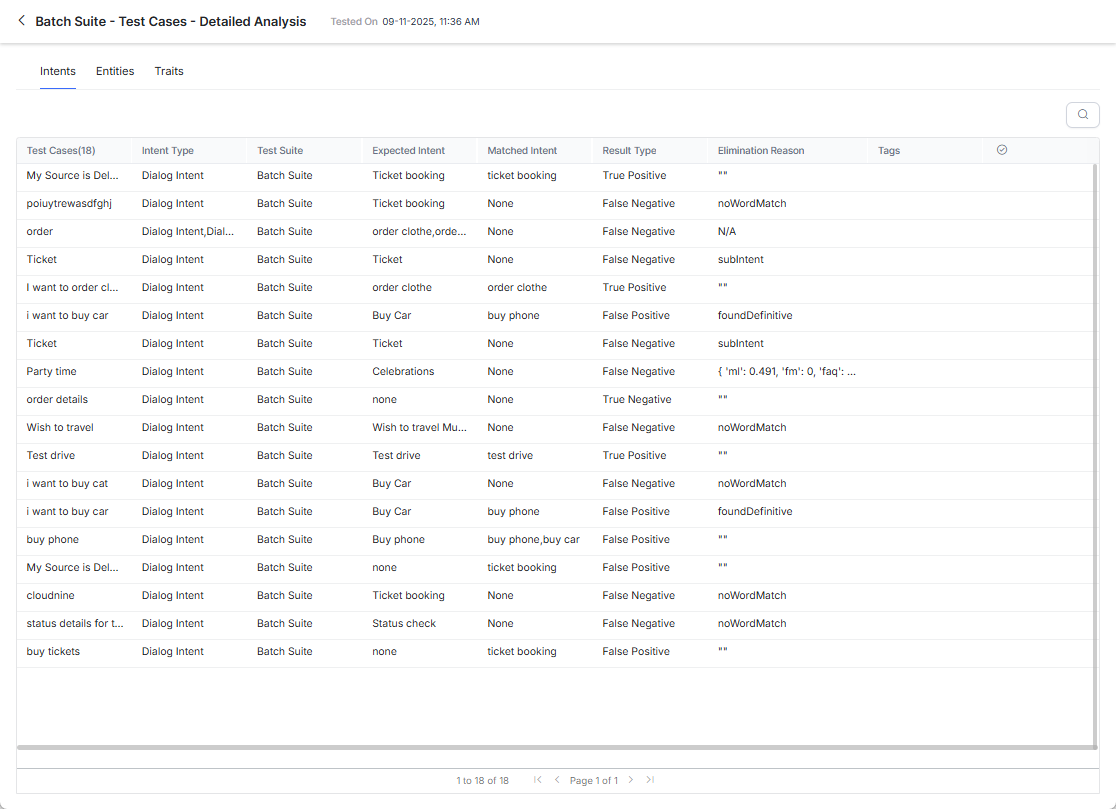

View Test Case Details

Running a test suite will display the results as described below.

Each test run creates a test report record and displays a summary of the test result. The batch test result in the screenshot below includes the following information:

- Last Run Date & Time that displays the date and time of the latest test run.

- F1 Score is the weighted average of Precision and Recall i.e. (2*precision*recall)/(precision+recall).

- Precision is the number of correctly classified utterances divided by the total number of utterances that got classified (correctly or incorrectly) to an existing task ie the ratio of true positives to all classified positives (sum of true and false positives) i.e. TP/(TP+FP).

- Recall is the number of correctly classified utterances divided by the total number of utterances that got classified correctly to any existing task or classified incorrectly as an absence of an existing task ie the ratio of correctly classified utterances to actual matching intents/tasks (sum of true positives and false negatives) i.e. TP/(TP+FN).

- Intent Success % that displays the percentage of correct intent recognition that has resulted from the test.

- Entity Success % that displays the percentage of correct entities recognition that has resulted from the test.

- Version Type identifies the version of the bot against which the test suite was run – development or published.

- There are three possible outcomes from each test run:

- Success – when all records are present in the file are processed

- Success with a warning – when one or more records present in the suite are discarded from detection due to system error

- Failed – when there was a system error and the test could not be resumed post-recovery.

Hovering over the warning/error icon will display a message suggesting the reason.

- Elimination Reason displays why a test failed only when the expected intent does not match the winning intent. The Platform clearly indicates whether the model causes the failure or the Ranking & Resolver (R&R) engine eliminates the intent. The column displays the R&R policy reason when available. Otherwise, it shows the expected intent scores from each engine in this format: FM: [score], ML: [score], FAQ: [score].

The Platform provides the following Elimination Reasons from R&R:- belowDependencyThreshold: Score is below the minimum dependency threshold.

- verbMatchOnly: Only the verb matches in a single-word match.

- entityMatchOnly: Only the entity (number, date, etc.) matches.

- foundDefinitive: A definitive match is found with only possible matches.

- outsideProximity: Score is below the minimum score threshold.

- withinAnotherTask: The task name is found within another task.

- noWordMatch: No word match is found.

- negationIntent: The intent match contains a negation.

- taskNotAvailable: The task is not available in the bot.

- subIntent: The task is a sub-intent.

To view the elimination column, click View Summary for a test case and then click View Test Cases. You can also download the CSV report to see the elimination reason.

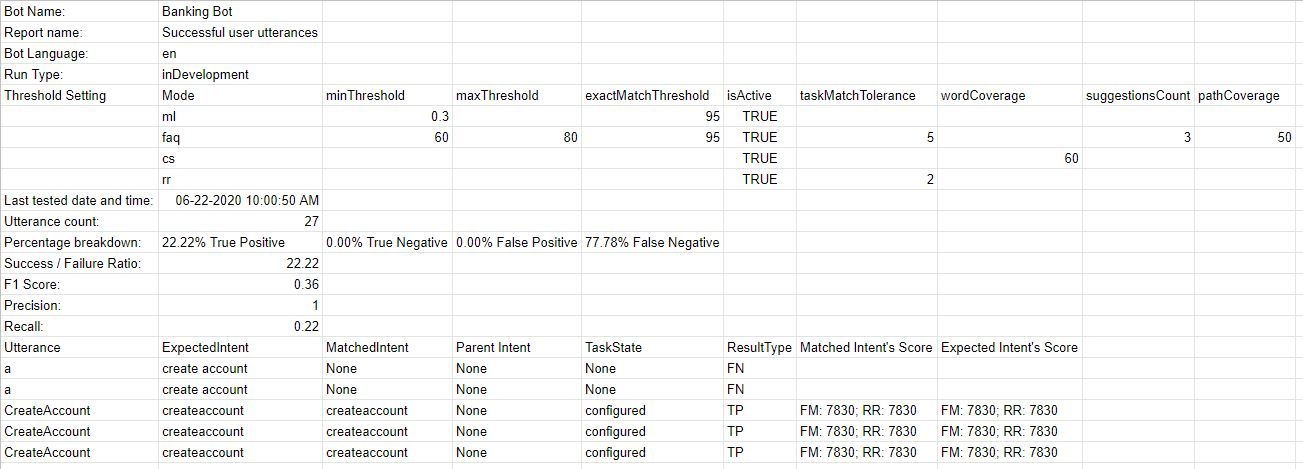

Download a CSV Report

To get a detailed analysis of the test run, click the Download icon to download the test report in CSV format. You have an option to delete the test results if needed. The top section of the report comprises the summary with the following fields:

- Bot Name

- Report name of the test suite

- Bot Language (post 7.3 release)

- Run Type identifies the version of the bot against which the test suite was run – development or published.

- Threshold Setting (post 7.3 release) detailing the NLP thresholds applied when running this test suite, this would be followed by the settings for each of the three NL engines with the following details:

- Mode – ml, faq, or, cs

- minThreshold

- maxThreshold

- exactMatchThreshold

- isActive

- taskMatchTolerance

- wordCoverage

- suggestionsCount

- pathCoverage

- Last Tested: Date of the latest test run for developer-defined utterances.

- Utterance Count: Total number of utterances included in the test run.

- Success/Failure Ratio: Total number of successfully predicted utterances divided by the total count of utterances multiplied by 100.

- True Positive (TP): Percentage of utterances that have correctly matched expected intent.In the case of Small Talk, TP is indicated when the list of expected and actual intents are the same.In the case of Traits, a TP state includes the traits matched over and above the expected matches.

Important: When you tag multiple intents (dialog, FAQ, and small talk) to a test utterance, the test result appears as TP if the actual intents match the expected intents but also include ambiguous intents.

- True Negative (TN): Percentage of utterances that were not expected to match any intent and they did not match. Not applicable to Small Talk.

- False Positive (FP): Percentage of utterances that have matched an unexpected intent. In the case of Small Talk, it would be when the list of expected and actual intents are different.

- False Negative (FN): Percentage of utterances that have not matched expected intent. In the case of Small Talk, it would be when the list of expected Small Talk intent is blank and but the actual Small Talk is mapped to an intent.

The report also provides detailed information on each of the test utterances and the corresponding results.

- Utterances – Utterances used in the corresponding test suite.

- Expected Intent – The intent expected to match for a given utterance, will include trait where applicable with trait prefix

- Matched Intent – The intent that is matched for an utterance during the batch test. This will include matched traits with trait prefix (post 7.3 release). This will include matched Small Talk intents (post 8.0 release).

- Parent Intent – The parent intent is considered for matching an utterance against an intent.

- Task State – The status of the intent or task against which the intent is identified. Possible values include Configured or Published

- Result Type – Result categorized as True Positive or True Negative or False Positive or False Negative

- Entity Name – The name of the entity detected from the utterance.

- Expected EntityValue – The entity value expected to be determined during the batch test.

- Matched EntityValue – The entity value identified from an utterance.

- Entity Result – Result categorized as True or False to indicate whether the expected entity value is the same as the actual entity value.

- Expected Entity Order – entity values from the input file

- Actual Entity Order –

- if the order for all expected entities is provided, then the same is included in this column

- if no order is provided, the system-determined order will be included in the column

- If an order is provided for some entities, then a combination of user-defined order and system-defined order will be included

- Matched Intent’s Score – For False Positives and False Negatives, the confidence scores from FM, ML, and/or KG engines are displayed for the matched intent from the utterance. Note that the scores are given only if the engine detects the intent, which means that you may not see the scores from all three engines at all times.

- Expected Intent’s Score – For False Positives, the confidence scores for the intent expected to match for the given utterance are given. Again, the score will be given by the engines detecting the intent.

-

Elimination Info – displays why a test failed only when the expected intent does not match the winning intent. The Platform clearly indicates whether the model causes the failure or the Ranking & Resolver (R&R) engine eliminates the intent. The column displays the R&R policy reason when available. Otherwise, it shows the expected intent scores from each engine in this format: FM: [score], ML: [score], FAQ: [score].

Batch Test Results for Universal Bots

The XO Platform now displays Batch Test Results for Universal bots for each test run in the summary view for FAQs, Dialog Intents, and Small Talk during Batch Testing. The actionable insights help understand the test coverage and NLP performance for each intent type in the Universal Bot. Learn more.

View Individual Test Run Summary

You can view the individual Batch Test Run summary of a test suite by clicking the View Summary icon of a test as shown below.

This displays the relevant NLP and Flow Health metrics on the Health and Monitoring Dashboard for intents mapped to Dialogs, FAQs, and Traits. Learn More.