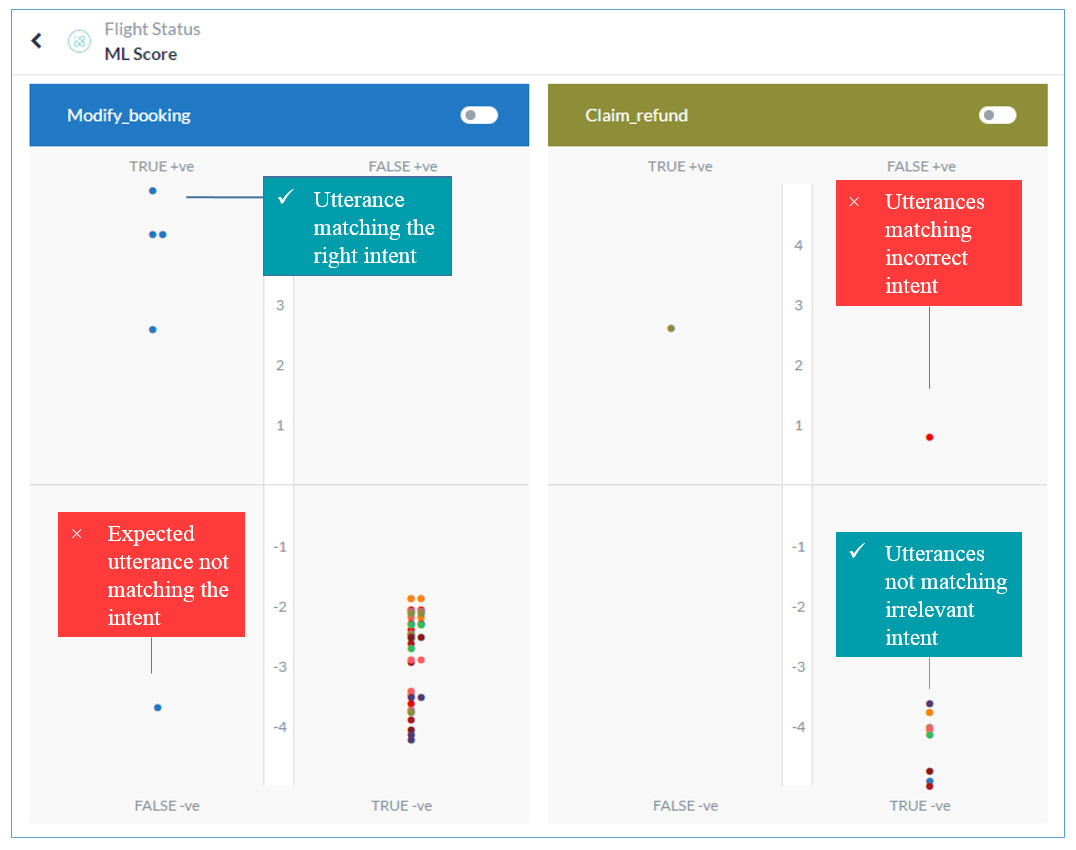

The Machine Learning Model (ML Model) graph presents an at-a-glance view of the performance of your trained utterances against the bot tasks.

The ML Model graph evaluates all the training utterances against each bot task and plots them into one of these quadrants of the task: True Positive (True +ve), True Negative (True -ve), False Positive (False +ve), False Negative (False -ve). A quick look at the graph and you know which utterance-intent matches are accurate and which can be further trained to produce better results.

The higher the utterance in the True quadrants, the better it exhibits the expected behavior: True +ve represents a strong match with the task for which the utterance is trained, and True -ve represents an expected mismatch with irrelevant intents. Utterances at a moderate level in the True quadrants can be further trained for better scores.

The utterances falling into the False quadrants need immediate attention. These are the utterances that are either not matching with the intended tasks or are matching with the wrong ones. To read the utterance text in any quadrant, hover over the dot in the graph.

To open the ML Model graph, hover over the side navigation panel of the bot, and select Natural Language > Machine Learning Utterances > View ML Model.

True Positive Quadrant

When utterances trained for an intent receive a positive confidence score for that intent, they fall into its True Positive quadrant. This quadrant represents a favorable outcome. The higher the utterance on the quadrant’s scale, the better its chances of finding the right intent.

Note: Utterances that fall into the true positive quadrants of multiple bot tasks denote overlapping bot tasks that need to be fixed.

True Negative Quadrant

When utterances not trained for an intent receive a negative confidence score for that intent, they fall into its True Negative quadrant. This quadrant represents a favorable outcome, as the utterance is not supposed to match the intent. The lower the utterance on the quadrant’s scale, the higher the chances of it staying away from the intent. All the utterances trained for a particular bot task should ideally fall into the True Negative quadrants of the other tasks.

False Positive Quadrant

When utterances that are not trained for an intent receive a positive confidence score for that intent, they fall into its False Positive quadrant. This quadrant represents an unfavorable outcome. For such outcomes, the utterance, the intended bot task, and the incorrectly matching task may have to be trained for an optimum result. Read Machine Learning to learn more.

False Negative Quadrant

When utterances trained for an intent receive a negative confidence score for that intent, they fall into its False Negative quadrant. This quadrant represents an unfavorable outcome, as the utterance is supposed to match the intent. For such outcomes, the utterance and the intended bot task need to be trained for an optimum outcome. Read Machine Learning to learn more.
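The four quadrant definitions above reduce to two questions: was the utterance trained for the task, and did it receive a positive score for it? The following is a minimal sketch of that rule, not the platform's implementation. The function name `quadrant`, the signed float score, and the treatment of a score of exactly zero as "not positive" are all assumptions made for illustration.

```python
from typing import Literal

Quadrant = Literal[
    "True Positive", "True Negative", "False Positive", "False Negative"
]


def quadrant(trained_for_task: bool, score: float) -> Quadrant:
    """Place one utterance in a quadrant of one task's graph.

    trained_for_task: whether the utterance was trained for this task.
    score: the confidence score for this task (assumed to be a signed
           number; a score of exactly 0 is assumed to count as no match).
    """
    matched = score > 0
    if trained_for_task:
        # Trained for the task: a match is expected.
        return "True Positive" if matched else "False Negative"
    # Not trained for the task: a match is unexpected.
    return "False Positive" if matched else "True Negative"
```

For example, an utterance trained for a task that scores below zero against that task lands in the task's False Negative quadrant, which is one of the cases that needs attention.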

Note: Make sure to click the train button after making any changes to your bot to reflect them in the ML Model Graph.

Consider the following key points when referring to the graph:

- The higher up the utterance in the True quadrants, the better it exhibits the expected behavior.

- Utterances at a moderate level in the True quadrants can be further trained for better scores.

- The utterances falling into the False quadrants need immediate attention.

- Utterances that fall into the True Positive quadrants of multiple bot tasks denote overlapping bot tasks that need to be fixed.
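The last point, overlapping bot tasks, can be checked mechanically if you have the training pairs and scores available outside the graph. The sketch below is hypothetical: the function name `overlapping_utterances`, the `(utterance, task)` pair layout, and the rule that a positive score means a match are assumptions, not part of the product.

```python
from collections import defaultdict


def overlapping_utterances(
    trained: set[tuple[str, str]],
    scores: dict[tuple[str, str], float],
) -> dict[str, list[str]]:
    """Find utterances that are True Positive for more than one task.

    trained: (utterance, task) pairs the bot was trained with.
    scores:  confidence score for each (utterance, task) pair;
             missing pairs are treated as score 0 (no match).
    """
    hits: dict[str, set[str]] = defaultdict(set)
    for utterance, task in trained:
        # True Positive: trained for the task and scored positively for it.
        if scores.get((utterance, task), 0.0) > 0:
            hits[utterance].add(task)
    # Keep only utterances that are True Positive in two or more tasks.
    return {u: sorted(tasks) for u, tasks in hits.items() if len(tasks) > 1}
```

Any utterance returned here points at two or more tasks whose training data overlaps, so those tasks are the ones to review first.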

Understanding a Good/Bad ML model

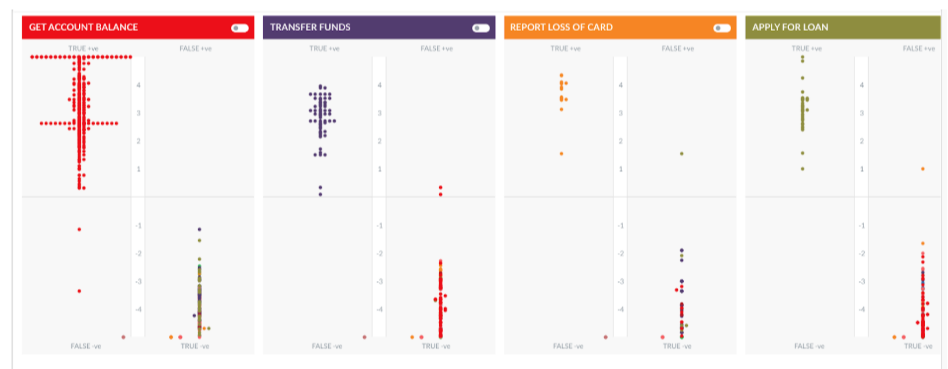

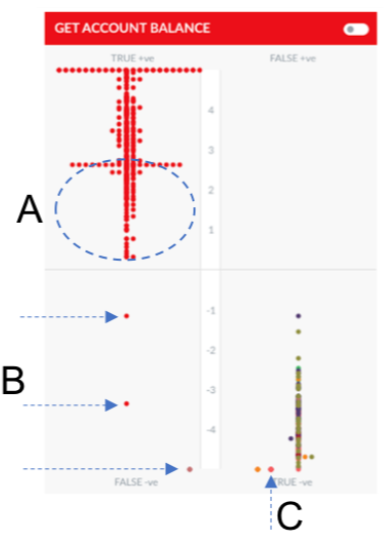

Let us consider a banking bot as an example for understanding a good or bad ML Model. The bot has multiple tasks with more than 300 trained utterances. The image depicts 4 tasks and the associated utterances.

The model in this scenario is fairly well trained: most of the utterances pertaining to a task are concentrated in its True Positive quadrant, and most of the utterances for other tasks are in its True Negative quadrant.

The developer can work to improve the following aspects of this model:

- In the ML model for task ‘Get Account Balance’ we can see a few utterances (B) in the False Positive quadrant.

- An utterance trained for ‘Get Account Balance’ appears in the True Negative quadrant (C).

- Though the model is well trained and most of the utterances for this task are higher up in the True Positive quadrant, some of the utterances still have a very low score. (A)

- When you hover over the dots, you can see the utterance. For A, B, and C, though the utterance should have exactly matched the intent, it has a low or negative score as a similar utterance has been trained for another intent.

Note: In such cases, it’s best to try the utterance with the Test & Train module and check the intents that the ML engine returns along with the associated sample utterances. Fine-tune the utterances and try again.

- The task named Report Loss of Card contains limited utterances which are concentrated together.

Let us now compare it with the ML Model for the Travel Bot below:

The model is trained with a lot of conflicting utterances, resulting in a scattered view of utterances. This is considered a bad model and needs to be retrained with a smaller set of utterances that do not relate to multiple tasks in the bot.

Viewing the Graph for Specific Task Utterances

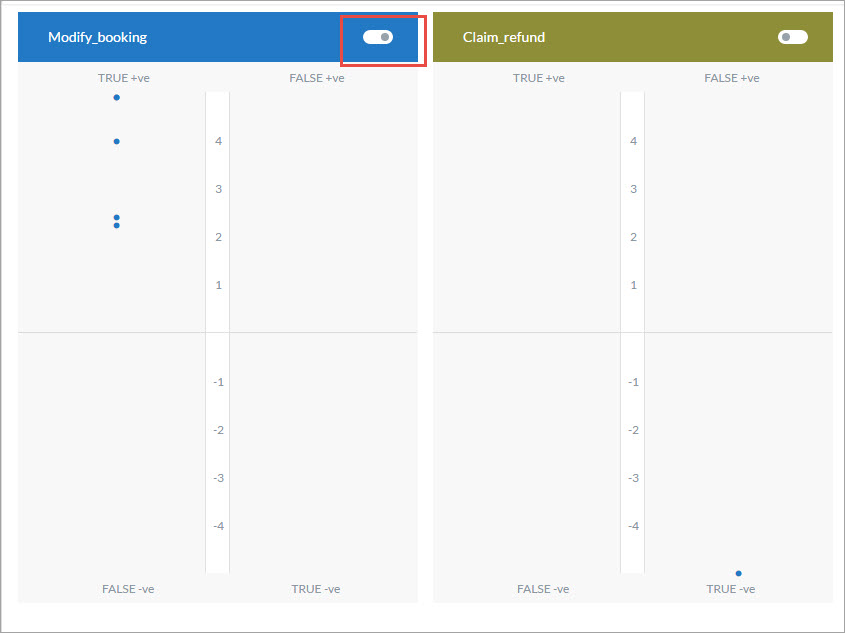

By default, the ML Model Graph shows the performance of all the trained utterances against all bot tasks. To view the performance of a specific bot task’s trained utterances against all the other intents, toggle the switch for the bot task as shown in the image below.

Note: Since these are the trained utterances of the first bot task, ideally the utterances should appear at the top of the True +ve quadrant of that task and the bottom of the True -ve quadrant of all the other tasks.

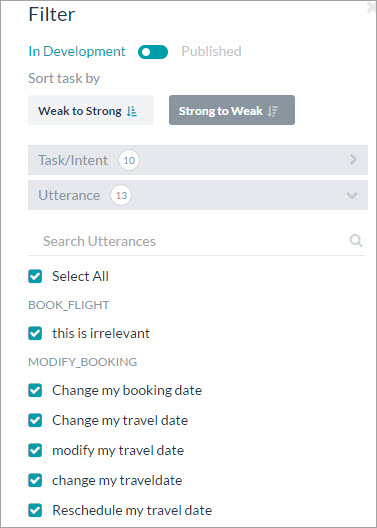

Filtering the ML Model Graph

You can filter the ML Model Graph based on the following criteria:

- In Development or Published: By default, the graph covers all tasks, both In Development and Published. Toggle the switch to view the graph for only the published tasks.

- Weak to Strong: View the graph from the least accurate task scores to the most accurate.

- Strong to Weak: View the graph from the most accurate task scores to the least.

- Task/Intent: Select all or select specific task names to view their graph.

- Utterance: Select all or select specific trained utterances to view their graph.
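To make the Weak to Strong and Strong to Weak orderings concrete, the sketch below ranks tasks by an assumed accuracy measure: the share of a task's plotted utterances that land in a True quadrant. The product does not document how it computes task accuracy, so this measure, the function names `task_accuracy` and `order_tasks`, and the `(task, trained_for_task, score)` tuple layout are all illustrative assumptions.

```python
def task_accuracy(
    points: list[tuple[str, bool, float]],
) -> dict[str, float]:
    """Return, per task, the fraction of dots that fall in a True quadrant.

    points: one (task, trained_for_task, score) tuple per plotted dot.
    """
    totals: dict[str, int] = {}
    correct: dict[str, int] = {}
    for task, trained_for_task, score in points:
        totals[task] = totals.get(task, 0) + 1
        # True quadrants: trained and positive, or not trained and not positive.
        if trained_for_task == (score > 0):
            correct[task] = correct.get(task, 0) + 1
    return {task: correct.get(task, 0) / totals[task] for task in totals}


def order_tasks(
    points: list[tuple[str, bool, float]], weak_to_strong: bool = True
) -> list[str]:
    """List task names ordered by the assumed accuracy measure."""
    accuracy = task_accuracy(points)
    return sorted(accuracy, key=lambda t: accuracy[t], reverse=not weak_to_strong)
```

Calling `order_tasks(points)` lists the weakest tasks first, which mirrors using the Weak to Strong filter to decide where to start retraining.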

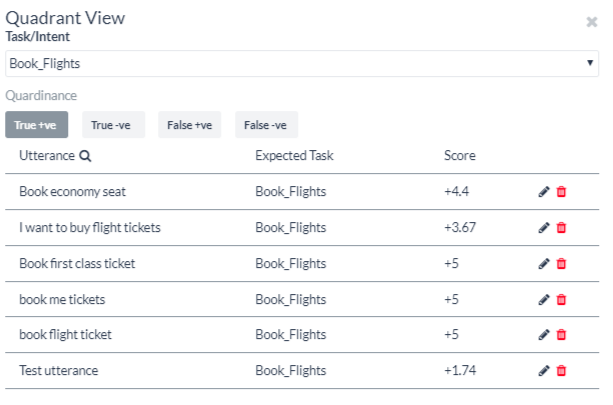

Editing and Remapping Utterances

You can edit user utterances and reassign them to other tasks to improve their scores directly from the ML Model Graph. To do so, click a quadrant. The Quadrant view opens with the name of the task and all the utterances related to it.

To edit an individual utterance, click the edit icon against it. The edit utterance window opens. You can make changes to the text in the Utterance field or reassign the utterance to another task using the Expected Task drop-down list.