The key for a conversational bot to understand human interactions lies in its ability to identify the intention of the user, extract useful information from their utterance, and map them to relevant actions or tasks. NLP (Natural Language Processing) is the science of deducing the intention (Intent) and related information (Entity) from natural conversations.

NLP Approach

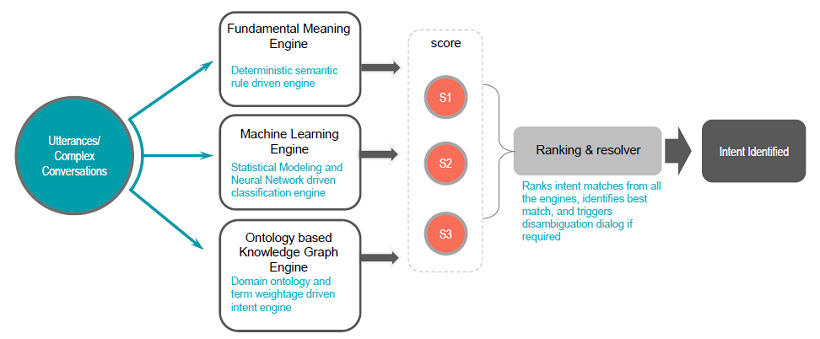

The Kore.ai Bots platform employs a multi-pronged approach to natural language, which combines the following three models for optimal outcomes:

- Fundamental Meaning: A computational linguistics approach that is built on ChatScript. The model analyzes the structure of a user’s utterance to identify each word by meaning, position, conjugation, capitalization, plurality, and other factors.

- Custom Machine Learning (ML): Kore.ai uses state-of-the-art NLP algorithms and models for machine learning.

- Ontology-based Knowledge Graph Engine (KG): Kore.ai Knowledge Graph helps you turn your static FAQ text into an intelligent and personalized conversational experience.

With its three-fold approach, the Kore.ai Bots platform enables you to instantly build conversational bots that can respond to 70% of conversations with no initial language training. It automatically enables NLP capabilities for all built-in and custom bots, and powers the way chatbots communicate, understand, and respond to a user request.

The Kore.ai team developed this hybrid NLP strategy without relying on third-party vendors’ services. In addition to detecting and performing tasks (Fundamental Meaning), the strategy makes it possible to build FAQ bots that return static responses.

The platform uses a Knowledge Graph-based model that provides the intelligence required to represent the key domain terms and their relationships in identifying the user’s intent (in this case the most appropriate question).

Machine Learning models complement the Knowledge Graph to help arrive at the right knowledge query.

Once all the engines return scores and recommendations, Kore.ai has a Ranking and Resolver engine that determines the winning intent based on the user utterance.
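The ranking step can be pictured with a minimal sketch. It assumes each engine returns a candidate intent with a score normalized to the range 0 to 1, and that the winner is simply the highest-scoring candidate above a threshold. The `Candidate` class, the `resolve` function, and the `min_score` value are hypothetical names chosen for illustration; the platform's actual Ranking and Resolver logic is not described here and is likely more involved.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    engine: str   # "FM", "ML", or "KG" (labels assumed for this sketch)
    intent: str
    score: float  # assumed to be normalized to the range [0, 1]


def resolve(candidates, min_score=0.5):
    """Return the highest-scoring candidate at or above min_score, or None."""
    eligible = [c for c in candidates if c.score >= min_score]
    if not eligible:
        return None
    return max(eligible, key=lambda c: c.score)


candidates = [
    Candidate("FM", "Transfer funds", 0.82),
    Candidate("ML", "Transfer funds", 0.74),
    Candidate("KG", "How do I transfer money abroad?", 0.41),
]

winner = resolve(candidates)
print(winner.intent if winner else "no intent identified")
```

When no candidate clears the threshold, `resolve` returns `None`, which a caller could treat as the signal to start a fallback dialog.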

Advantages of Kore.ai Approach

Most products only use machine learning (ML) for natural language processing. An ML-only approach requires extensive training of the bot for high success rates. Inadequate training can cause inaccurate results. As training data, one must provide a collection of sentences (utterances) that match a chatbot’s intended goal and eventually a group of sentences that do not. When the bot uses ML, it does not understand an input sentence. Instead, it measures the similarity of data input to the training data imparted to it.
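To make the similarity idea concrete, here is a minimal sketch of an ML-only matcher. It is not the Kore.ai ML engine: it uses a plain bag-of-words cosine similarity, and the `TRAINING` utterances and the `classify` function are made up for illustration.

```python
import math
from collections import Counter

# Hypothetical training utterances, keyed by intent.
TRAINING = {
    "Find an ATM": ["find an atm near me", "where is the nearest atm"],
    "Transfer funds": ["transfer money to my savings", "send funds to john"],
}


def vectorize(text):
    """Turn a sentence into a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def classify(utterance):
    """Return the intent of the most similar training sentence and its score."""
    query = vectorize(utterance)
    best_intent, best_score = None, 0.0
    for intent, samples in TRAINING.items():
        for sample in samples:
            score = cosine(query, vectorize(sample))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent, best_score


print(classify("send money to my savings")[0])
print(classify("what is the weather")[0])
```

The second call shows the weakness described above: the unrelated question about the weather is matched to Find an ATM only because it shares the words "is" and "the" with a training sentence, not because the matcher understood it.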

Our approach combines Fundamental Meaning (FM), Machine Learning (ML), and Knowledge Graph (KG), making it easy to build natural language capable chatbots regardless of how extensively the bot has been trained. Enterprise developers can solve real-world dynamics by leveraging the inherent benefits of these approaches and eliminating their individual shortcomings.

Intent Detection

Chatbot tasks are broken down into a few words that describe what a user intends to do, usually a verb and a noun, such as Find an ATM, Create an event, Search for an item, Send an alert, or Transfer funds.

Kore.ai’s NLP engine analyzes the structure of a user’s utterance to identify each word by meaning, position, conjugation, capitalization, plurality, and other factors. This analysis helps the chatbot to correctly interpret and understand the common action words.

The goal of intent recognition is not just to match an utterance with a task, but to match it with its correctly intended task. We do this by matching verbs and nouns with as many obvious and non-obvious synonyms as possible. In doing so, enterprise developers can solve real-world dynamics and gain the inherent benefits of both ML and FM approaches, while eliminating the shortcomings of the individual methods.

Machine Learning Model Training

To train the machine learning model, developers need to provide sample utterances for each intent (task) the bot needs to identify. The platform ML engine builds a model that tries to map a user utterance to one of the bot intents.

Kore.ai’s Bots platform allows fully unsupervised machine learning to constantly expand the language capabilities of your chatbot without human intervention. Unlike the other unsupervised models in which chatbots learn from any good or bad input, the Kore.ai Bots platform enables chatbots to automatically increase their vocabulary only when the chatbot successfully recognizes the intent and extracts the entities of a human’s request to complete a task.

However, we recommend keeping supervised learning enabled so that you can monitor the bot’s performance and tune it manually when required. Using the bots platform, developers can evaluate all interaction logs, easily change NL settings for failed scenarios, and use the learnings to retrain the bot for better conversations. Learn more about adding utterances.

Fundamental Meaning Model Training

The Fundamental Meaning model creates a form of the input that uses the canonical version of each word in the user utterance. It converts:

- verbs into their infinitive form

- nouns into their singular form

- numbers into digits

The intent recognition process then uses this canonical form for matching. The original input form is still available and is referenced for certain entities like proper names where there isn’t a canonical form.
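The conversions listed above can be sketched as follows. This is only an illustration under strong assumptions: the lookup tables are tiny and hand-written, the plural rule is naive, and the function names are hypothetical. A real engine would rely on a full lexicon and part-of-speech information.

```python
import re

# Tiny illustrative lookup tables (assumptions, not the platform's data).
VERB_INFINITIVES = {
    "sent": "send", "sending": "send",
    "found": "find", "finding": "find",
    "transferred": "transfer",
}
IRREGULAR_SINGULARS = {"people": "person", "children": "child"}
NUMBER_WORDS = {"one": "1", "two": "2", "three": "3", "four": "4", "five": "5"}


def canonical_word(word):
    """Map one word to a canonical form using the tables above."""
    lower = word.lower()
    if lower in NUMBER_WORDS:
        return NUMBER_WORDS[lower]
    if lower in VERB_INFINITIVES:
        return VERB_INFINITIVES[lower]
    if lower in IRREGULAR_SINGULARS:
        return IRREGULAR_SINGULARS[lower]
    # Naive plural rule: strip a trailing "s" (but not "ss") from longer words.
    if len(lower) > 3 and lower.endswith("s") and not lower.endswith("ss"):
        return lower[:-1]
    return lower


def canonicalize(utterance):
    """Return (original, canonical) pairs so the original form stays available."""
    words = re.findall(r"[A-Za-z0-9']+", utterance)
    return [(w, canonical_word(w)) for w in words]


print(canonicalize("Sending two alerts"))
# [('Sending', 'send'), ('two', '2'), ('alerts', 'alert')]
```

Returning pairs rather than only the canonical words mirrors the point that the original input is still referenced for values such as proper names.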

The Fundamental Meaning model considers parts of speech and inbuilt concepts to identify each word in the user utterance and relate it with the intents the bot can perform. The scoring is based on the number of words matched, total word coverage, and more.
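The exact scoring formula is not given here, so the following is only a sketch of how the number of matched words and word coverage could be combined. The `fm_score` function and its equal weighting of the two coverage ratios are assumptions made for illustration.

```python
def fm_score(utterance_words, intent_words):
    """Score an intent by matched words and coverage (illustrative formula)."""
    utterance = set(utterance_words)
    intent = set(intent_words)
    matched = utterance & intent
    if not matched:
        return 0.0
    intent_coverage = len(matched) / len(intent)        # share of the intent name matched
    utterance_coverage = len(matched) / len(utterance)  # share of the utterance explained
    # Equal weighting is an assumption, not the platform's formula.
    return (intent_coverage + utterance_coverage) / 2


print(fm_score(["find", "atm", "near", "me"], ["find", "atm"]))  # 0.75
print(fm_score(["find", "atm", "near", "me"], ["send", "alert"]))  # 0.0
```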

The platform provides the following tools to train the Fundamental Meaning engine:

- Patterns: Using Patterns you can define slang, metaphors, or other idiomatic expressions for task names. Learn more about patterns along with examples.

- Synonyms: The platform includes a built-in synonym library for common terms. Developers can further optimize the accuracy of the NLP engine by adding synonyms for bot names, words used in the names of tasks and task fields, and any words associated with a dialog task entity node. The platform auto-corrects domain-specific words, such as Paracetamol or IVR, unless they are specifically trained. Learn more about synonyms along with examples.

The platform also facilitates a default dialog option which is initiated automatically if the platform fails to identify an intent from a user utterance. Developers can modify the dialog based on the bot requirement. We also provide the ability for a human reviewer (developer, customer, support personnel, and more) to passively review user utterances and mark the ones that need further training. Once trained, the bot recognizes the utterances based on the newly trained model.

Entity Detection

Once the intent is detected from a user utterance, the bot needs additional information to trigger the task. These additional pieces of information are called entities.

Entities are fields, data, or words that the developer designates as necessary for the chatbot to complete a task, such as a date, time, person, location, description of an item or a product, or any number of other designations. Through the Kore.ai NLP engine, the bot identifies words from a user’s utterance to fill the fields of the matched task, and collects additional field data if needed.

The goal of entity extraction is to fill the gaps to complete the task while ignoring unnecessary details. It is a subtractive process to get the necessary info, whether the user provides all at once or through a guided conversation with the chatbot. The platform supports the identification and extraction of 20+ system entities out of the box. Read more about entities.

You can use the following two approaches to train the bot to identify entities in the user utterance:

- Named Entity Recognition (NER) based on Machine Learning.

- Entity pattern definition and synonyms.

Entity training is optional; without it, Kore.ai can still extract entities. Training, however, guides the engine on where to look in the input. Kore.ai still validates the value it finds and moves on to another location if those words are not suitable. For example, if a Number entity pattern is "for * people" but the user says "for nice people", the bot understands that "nice" is not a number and continues searching. Learn more here.
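The Number entity example can be sketched as follows. The sketch assumes a three-part pattern of the form "for * people", where the wildcard marks the position to check first, and it falls back to scanning the rest of the utterance when the hinted word fails validation. The `extract_number` function and the small `NUMBER_WORDS` table are hypothetical and do not reflect the platform's pattern syntax or implementation.

```python
import re

# Small illustrative table of number words (an assumption for this sketch).
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}


def to_number(token):
    """Validate a token as a number; return None if it is not one."""
    token = token.lower()
    if token.isdigit():
        return int(token)
    return NUMBER_WORDS.get(token)


def extract_number(utterance, pattern=("for", "*", "people")):
    """Look where the pattern points first, then keep searching elsewhere."""
    tokens = re.findall(r"[A-Za-z0-9]+", utterance)
    before, _, after = pattern
    # First pass: positions hinted at by the pattern.
    for i in range(1, len(tokens) - 1):
        if tokens[i - 1].lower() == before and tokens[i + 1].lower() == after:
            value = to_number(tokens[i])
            if value is not None:
                return value
    # The hinted word was missing or not a number: search the whole utterance.
    for token in tokens:
        value = to_number(token)
        if value is not None:
            return value
    return None


print(extract_number("book a table for 4 people"))     # 4
print(extract_number("book 2 rooms for nice people"))  # 2
print(extract_number("a table for nice people"))       # None
```

In the second call, the pattern points at "nice", validation rejects it, and the search continues until it finds 2.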

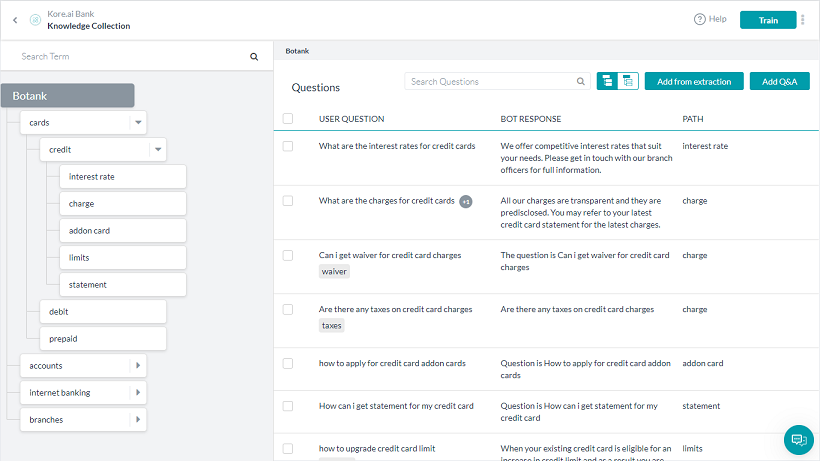

Knowledge Graph

Kore.ai Knowledge Graph goes beyond the usual practice of capturing FAQs in the form of flat question-answer pairs. Instead, the Knowledge Graph enables you to create a hierarchical structure of key domain terms and associate them with context-specific questions and their alternatives, synonyms, and machine learning-enabled classes.

The Knowledge Graph requires less training and lets you weight the importance of words, with fewer false positives for terms marked as mandatory.
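The effect of mandatory terms can be illustrated with a small sketch. It assumes each FAQ is attached to a path of domain terms and that a question qualifies only when all of its mandatory terms appear in the utterance. The `FAQS` data, the field names, and the coverage-based ordering are hypothetical and are not the Knowledge Graph engine's actual data model.

```python
# Hypothetical FAQ entries for a bank; each sits on a path of domain terms.
FAQS = [
    {"path": ["account", "savings"], "mandatory": {"savings"},
     "question": "How do I open a savings account?"},
    {"path": ["account", "checking"], "mandatory": {"checking"},
     "question": "How do I open a checking account?"},
    {"path": ["loan", "home"], "mandatory": {"loan"},
     "question": "What is the home loan interest rate?"},
]


def qualifying_questions(utterance):
    """Return questions whose mandatory terms all appear, best path coverage first."""
    words = set(utterance.lower().split())
    results = []
    for faq in FAQS:
        # Skip the question unless every mandatory term is present.
        if not faq["mandatory"] <= words:
            continue
        coverage = len(words & set(faq["path"])) / len(faq["path"])
        results.append((coverage, faq["question"]))
    return [question for _, question in sorted(results, reverse=True)]


print(qualifying_questions("open a savings account"))
# ['How do I open a savings account?']
print(qualifying_questions("open an account"))
# []
```

Because "account" alone satisfies no mandatory term, the second utterance matches nothing instead of returning both account questions, which is how mandatory terms reduce false positives in this sketch.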

The following image provides an overview of a Knowledge Graph for sample FAQs of a bank. Click here to learn more.